After completing this chapter, you should be able to:
1. Describe when intellectual critical appraisals of studies are conducted in nursing.
2. Implement key principles in critically appraising quantitative and qualitative studies.
3. Describe the three steps for critically appraising a study: (1) identifying the steps of the research process in the study; (2) determining study strengths and weaknesses; and (3) evaluating the credibility and meaning of the study findings.
4. Conduct a critical appraisal of a quantitative research report.
5. Conduct a critical appraisal of a qualitative research report.

Critical appraisal of qualitative studies
Critical appraisal of quantitative studies
Determining strengths and weaknesses in the studies
Evaluating the credibility and meaning of study findings
Identifying the steps of the research process in studies
Intellectual critical appraisal of a study
Qualitative research critical appraisal process
Quantitative research critical appraisal process

The nursing profession continually strives for evidence-based practice (EBP), which includes critically appraising studies, synthesizing the findings, applying the scientific evidence in practice, and determining the practice outcomes (Brown, 2014; Doran, 2011; Melnyk & Fineout-Overholt, 2011). Critically appraising studies is an essential step toward basing your practice on current research findings. A critical appraisal, or critique, is an examination of the quality of a study to determine the credibility and meaning of the findings for nursing. Critique is often associated with criticize, a word that is frequently viewed as negative. In the arts and sciences, however, critique is associated with critical thinking and evaluation, tasks that require carefully developed intellectual skills. This type of critique is referred to as an intellectual critical appraisal.
An intellectual critical appraisal is directed at the element that is created, such as a study, rather than at the creator, and involves the evaluation of the quality of that element. For example, it is possible to conduct an intellectual critical appraisal of a work of art, an essay, and a study. The idea of the intellectual critical appraisal of research was introduced earlier in this text and has been woven throughout the chapters. As each step of the research process was introduced, guidelines were provided to direct the critical appraisal of that aspect of a research report. This chapter summarizes and builds on previous critical appraisal content and provides direction for conducting critical appraisals of quantitative and qualitative studies. The background provided by this chapter serves as a foundation for the critical appraisal of research syntheses (systematic reviews, meta-analyses, meta-syntheses, and mixed-methods systematic reviews) presented in Chapter 13. One aspect of learning the research process is being able to read and comprehend published research reports. However, conducting a critical appraisal of a study is not a basic skill, and the content presented in previous chapters is essential for implementing this process. Students usually acquire basic knowledge of the research process and critical appraisal process in their baccalaureate program. More advanced analysis skills are often taught at the master’s and doctoral levels. Performing a critical appraisal of a study involves the following three steps, which are detailed in this chapter: (1) identifying the steps or elements of the study; (2) determining the study strengths and limitations; and (3) evaluating the credibility and meaning of the study findings. By critically appraising studies, you will expand your analysis skills, strengthen your knowledge base, and increase your use of research evidence in practice. 
Striving for EBP is one of the competencies identified for associate degree and baccalaureate degree (prelicensure) students by the Quality and Safety Education for Nurses (QSEN, 2013) project, and EBP requires critical appraisal and synthesis of study findings for practice (Sherwood & Barnsteiner, 2012). Therefore critical appraisal of studies is an important part of your education and your practice as a nurse. Practicing nurses need to appraise studies critically so that their practice is based on current research evidence and not on tradition or trial and error (Brown, 2014; Craig & Smyth, 2012). Nursing actions need to be updated in response to current evidence that is generated through research and theory development. It is important for practicing nurses to design methods for remaining current in their practice areas. Reading research journals and posting or e-mailing current studies at work can increase nurses’ awareness of study findings but are not sufficient for critical appraisal to occur. Nurses need to question the quality of the studies, credibility of the findings, and meaning of the findings for practice. For example, nurses might form a research journal club in which studies are presented and critically appraised by members of the group (Gloeckner & Robinson, 2010). Skills in critical appraisal of research enable practicing nurses to synthesize the most credible, significant, and appropriate evidence for use in their practice. EBP is essential in agencies that are seeking or maintaining Magnet status. The Magnet Recognition Program was developed by the American Nurses Credentialing Center (ANCC, 2013) to “recognize healthcare organizations for quality patient care, nursing excellence, and innovations in professional nursing,” which requires implementing the most current research evidence in practice (see http://www.nursecredentialing.org/Magnet/ProgramOverview.aspx). 
Critical appraisals have been published following some studies in research journals. For example, the research journals Scholarly Inquiry for Nursing Practice: An International Journal and Western Journal of Nursing Research include commentaries after the research articles. In these commentaries, other researchers critically appraise the authors’ studies, and the authors have a chance to respond to these comments. Published research critical appraisals often increase the reader’s understanding of the study and the quality of the study findings (American Psychological Association [APA], 2010). A more informal critical appraisal of a published study might appear in a letter to the editor. Readers have the opportunity to comment on the strengths and weaknesses of published studies by writing to the journal editor. Planners of professional conferences often invite researchers to submit an abstract of a study they are conducting or have completed for potential presentation at the conference. The amount of information available is usually limited, because many abstracts are restricted to 100 to 250 words. Nevertheless, reviewers must select the best-designed studies with the most significant outcomes for presentation at nursing conferences. This process requires an experienced researcher who needs few cues to determine the quality of a study. Critical appraisal of an abstract usually addresses the following criteria: (1) appropriateness of the study for the conference program; (2) completeness of the research project; (3) overall quality of the study problem, purpose, methodology, and results; (4) contribution of the study to nursing’s knowledge base; (5) contribution of the study to nursing theory; (6) originality of the work (not previously published); (7) implication of the study findings for practice; and (8) clarity, conciseness, and completeness of the abstract (APA, 2010; Grove, Burns, & Gray, 2013). 
Some nurse researchers serve as peer reviewers for professional journals to evaluate the quality of research papers submitted for publication. The role of these scientists is to ensure that the studies accepted for publication are well designed and contribute to the body of knowledge. Journals that have their articles critically appraised by expert peer reviewers are called peer-reviewed journals or refereed journals (Pyrczak, 2008). The reviewers’ comments or summaries of their comments are sent to the researchers to direct their revision of the manuscripts for publication. Refereed journals usually have studies and articles of higher quality and provide excellent studies for your review for practice.

Critical appraisals of research proposals are conducted to approve student research projects, permit data collection in an institution, and select the best studies for funding by local, state, national, and international organizations and agencies. You might be involved in a proposal review if you are participating in collecting data as part of a class project or studies done in your clinical agency. More details on proposal development and approval can be found in Grove et al. (2013, Chapter 28). Research proposals are reviewed for funding from selected government agencies and corporations. Private corporations develop their own format for reviewing and funding research projects (Grove et al., 2013). The peer review process in federal funding agencies involves an extremely complex critical appraisal. Nurses are involved in this level of research review through national funding agencies, such as the National Institute of Nursing Research (NINR, 2013) and the Agency for Healthcare Research and Quality (AHRQ, 2013).

An intellectual critical appraisal of a study involves a careful and complete examination of a study to judge its strengths, weaknesses, credibility, meaning, and significance for practice.
A high-quality study focuses on a significant problem, demonstrates sound methodology, produces credible findings, indicates implications for practice, and provides a basis for additional studies (Grove et al., 2013; Hoare & Hoe, 2013; Hoe & Hoare, 2012). Ultimately, the findings from several quality studies can be synthesized to provide empirical evidence for use in practice (O’Mathuna, Fineout-Overholt, & Johnston, 2011). The major focus of this chapter is conducting critical appraisals of quantitative and qualitative studies. These critical appraisals involve implementing some key principles or guidelines, outlined in Box 12-1. These guidelines stress the importance of examining the expertise of the authors, reviewing the entire study, addressing the study’s strengths and weaknesses, and evaluating the credibility of the study findings (Fawcett & Garity, 2009; Hoare & Hoe, 2013; Hoe & Hoare, 2012; Munhall, 2012). All studies have weaknesses or flaws; if every flawed study were discarded, no scientific evidence would be available for use in practice. In fact, science itself is flawed. Science does not completely or perfectly describe, explain, predict, or control reality. However, improved understanding and increased ability to predict and control phenomena depend on recognizing the flaws in studies and science. Additional studies can then be planned to minimize the weaknesses of earlier studies. You also need to recognize a study’s strengths to determine the quality of a study and credibility of its findings. When identifying a study’s strengths and weaknesses, you need to provide examples and rationale for your judgments that are documented with current literature. Critical appraisal of quantitative and qualitative studies involves a final evaluation to determine the credibility of the study findings and any implications for practice and further research (see Box 12-1). 
Adding together the strong points from multiple studies slowly builds a solid base of evidence for practice. These guidelines provide a basis for the critical appraisal process for quantitative research discussed in the next section and the critical appraisal process for qualitative research (see later). • Was the abstract clearly presented? • Was the writing style of the report clear and concise? • Were relevant terms defined? You might underline the terms you do not understand and determine their meaning from the glossary at the end of this text. • Were the following parts of the research report plainly identified (APA, 2010)? • Introduction section, with the problem, purpose, literature review, framework, study variables, and objectives, questions, or hypotheses • Methods section, with the design, sample, intervention (if applicable), measurement methods, and data collection or procedures • Results section, with the specific results presented in tables, figures, and narrative • Discussion section, with the findings, conclusions, limitations, generalizations, implications for practice, and suggestions for future research (Fawcett & Garity, 2009; Grove et al., 2013) We recommend reading the research article a second time and highlighting or underlining the steps of the quantitative research process that were identified previously. An overview of these steps is presented in Chapter 2. After reading and comprehending the content of the study, you are ready to write your initial critical appraisal of the study. To write a critical appraisal identifying the study steps, you need to identify each step of the research process concisely and respond briefly to the following guidelines and questions. a. Describe the qualifications of the authors to conduct the study (e.g., research expertise conducting previous studies, clinical experience indicated by job, national certification, and years in practice, and educational preparation that includes conducting research [PhD]). 
b. Discuss the clarity of the article title. Is the title clearly focused and does it include key study variables and population? Does the title indicate the type of study conducted (descriptive, correlational, quasi-experimental, or experimental) and the variables (Fawcett & Garity, 2009; Hoe & Hoare, 2012; Shadish, Cook, & Campbell, 2002)?
c. Discuss the quality of the abstract (includes purpose, highlights design, sample, and intervention [if applicable], and presents key results; APA, 2010).
4. Examine the literature review.
a. Are relevant previous studies and theories described?
b. Are the references current (number and percentage of sources in the last 5 and 10 years)?
c. Are the studies described, critically appraised, and synthesized (Brown, 2014; Fawcett & Garity, 2009)? Are the studies from refereed journals?
d. Is a summary provided of the current knowledge (what is known and not known) about the research problem?
5. Examine the study framework or theoretical perspective.
a. Is the framework explicitly expressed, or must you extract the framework from statements in the introduction or literature review of the study?
b. Is the framework based on tentative, substantive, or scientific theory? Provide a rationale for your answer.
c. Does the framework identify, define, and describe the relationships among the concepts of interest? Provide examples of this.
d. Is a map of the framework provided for clarity? If a map is not presented, develop a map that represents the study’s framework and describe the map.
e. Link the study variables to the relevant concepts in the map.
f. How is the framework related to nursing’s body of knowledge (Alligood, 2010; Fawcett & Garity, 2009; Smith & Liehr, 2008)?
6. List any research objectives, questions, or hypotheses.
7. Identify and define (conceptually and operationally) the study variables or concepts that were identified in the objectives, questions, or hypotheses.
If objectives, questions, or hypotheses are not stated, identify and define the variables in the study purpose and results section of the study. If conceptual definitions are not found, identify possible definitions for each major study variable. Indicate which of the following types of variables were included in the study. A study usually includes independent and dependent variables or research variables, but not all three types of variables. a. Independent variables: Identify and define conceptually and operationally. b. Dependent variables: Identify and define conceptually and operationally. c. Research variables or concepts: Identify and define conceptually and operationally. 8. Identify attribute or demographic variables and other relevant terms. 9. Identify the research design. a. Identify the specific design of the study (see Chapter 8). b. Does the study include a treatment or intervention? If so, is the treatment clearly described with a protocol and consistently implemented? c. If the study has more than one group, how were subjects assigned to groups? d. Are extraneous variables identified and controlled? Extraneous variables are usually discussed as a part of quasi-experimental and experimental studies. e. Were pilot study findings used to design this study? If yes, briefly discuss the pilot and the changes made in this study based on the pilot (Grove et al., 2013; Shadish et al., 2002). 10. Describe the sample and setting. a. Identify inclusion and exclusion sample or eligibility criteria. b. Identify the specific type of probability or nonprobability sampling method that was used to obtain the sample. Did the researchers identify the sampling frame for the study? c. Identify the sample size. Discuss the refusal number and percentage, and include the rationale for refusal if presented in the article. Discuss the power analysis if this process was used to determine sample size (Aberson, 2010). d. 
Identify the sample attrition (number and percentage) for the study. e. Identify the characteristics of the sample. f. Discuss the institutional review board (IRB) approval. Describe the informed consent process used in the study. g. Identify the study setting and indicate whether it is appropriate for the study purpose. 11. Identify and describe each measurement strategy used in the study. The following table includes the critical information about two measurement methods, the Beck Likert scale and a physiological instrument to measure blood pressure. Completing this table will allow you to cover essential measurement content for a study (Waltz, Strickland, & Lenz, 2010). a. Identify each study variable that was measured. b. Identify the name and author of each measurement strategy. c. Identify the type of each measurement strategy (e.g., Likert scale, visual analog scale, physiological measure, or existing database). d. Identify the level of measurement (nominal, ordinal, interval, or ratio) achieved by each measurement method used in the study (Grove, 2007). e. Describe the reliability of each scale for previous studies and this study. Identify the precision of each physiological measure (Bialocerkowski, Klupp, & Bragge, 2010; DeVon et al., 2007). f. Identify the validity of each scale and the accuracy of physiological measures (DeVon et al., 2007; Ryan-Wenger, 2010). 12. Describe the procedures for data collection. 13. Describe the statistical analyses used. a. List the statistical procedures used to describe the sample (Grove, 2007). b. Was the level of significance or alpha identified? If so, indicate what it was (0.05, 0.01, or 0.001). c. 
Complete the following table with the analysis techniques conducted in the study: (1) identify the focus (description, relationships, or differences) for each analysis technique; (2) list the statistical analysis technique performed; (3) list the statistic; (4) provide the specific results; and (5) identify the probability (p) of the statistical significance achieved by the result (Grove, 2007; Grove et al., 2013; Hoare & Hoe, 2013; Plichta & Kelvin, 2013). 14. Describe the researcher’s interpretation of findings. a. Are the findings related back to the study framework? If so, do the findings support the study framework? b. Which findings are consistent with those expected? c. Which findings were not expected? d. Are the findings consistent with previous research findings? (Fawcett & Garity, 2009; Grove et al., 2013; Hoare & Hoe, 2013) 15. What study limitations did the researcher identify? 16. What conclusions did the researchers identify based on their interpretation of the study findings? 17. How did the researcher generalize the findings? 18. What were the implications of the findings for nursing practice? 19. What suggestions for further study were identified? 20. Is the description of the study sufficiently clear for replication? The second step in critically appraising studies requires determining strengths and weaknesses in the studies. To do this, you must have knowledge of what each step of the research process should be like from expert sources such as this text and other research sources (Aberson, 2010; Bialocerkowski et al., 2010; Brown, 2014; Creswell, 2014; DeVon et al., 2007; Doran, 2011; Fawcett & Garity, 2009; Grove, 2007; Grove et al., 2013; Hoare & Hoe, 2013; Hoe & Hoare, 2012; Morrison, Hoppe, Gillmore, Kluver, Higa, & Wells, 2009; O’Mathuna et al., 2011; Ryan-Wenger, 2010; Santacroce, Maccarelli, & Grey, 2004; Shadish et al., 2002; Waltz et al., 2010). 
The ideal ways to conduct the steps of the research process are then compared with the actual study steps. During this comparison, you examine the extent to which the researcher followed the rules for an ideal study, and the study elements are examined for strengths and weaknesses. The following questions were developed to help you examine the different steps of a study and determine its strengths and weaknesses. The intent is not for you to answer each of these questions but to read the questions and then make judgments about the steps in the study. You need to provide a rationale for your decisions and document from relevant research sources, such as those listed previously in this section and in the references at the end of this chapter. For example, you might decide that the study purpose is a strength because it addresses the study problem, clarifies the focus of the study, and is feasible to investigate (Brown, 2014; Fawcett & Garity, 2009; Hoe & Hoare, 2012).
1. Research problem and purpose
a. Is the problem significant to nursing and clinical practice (Brown, 2014)?
b. Does the purpose narrow and clarify the focus of the study (Creswell, 2014; Fawcett & Garity, 2009)?
c. Was this study feasible to conduct in terms of money commitment; the researchers’ expertise; availability of subjects, facilities, and equipment; and ethical considerations?
2. Literature review
a. Is the literature review organized to demonstrate the progressive development of evidence from previous research (Brown, 2014; Creswell, 2014; Hoe & Hoare, 2012)?
b. Is a clear and concise summary presented of the current empirical and theoretical knowledge in the area of the study (O’Mathuna et al., 2011)?
c. Does the literature review summary identify what is known and not known about the research problem and provide direction for the formation of the research purpose?
3. Study framework
a. Is the framework presented with clarity?
If a model or conceptual map of the framework is present, is it adequate to explain the phenomenon of concern (Grove et al., 2013)?
b. Is the framework related to the body of knowledge in nursing and clinical practice?
c. If a proposition from a theory is to be tested, is the proposition clearly identified and linked to the study hypotheses (Alligood, 2010; Fawcett & Garity, 2009; Smith & Liehr, 2008)?
4. Research objectives, questions, or hypotheses
a. Are the objectives, questions, or hypotheses expressed clearly?
b. Are the objectives, questions, or hypotheses logically linked to the research purpose?
c. Are hypotheses stated to direct the conduct of quasi-experimental and experimental research (Creswell, 2014; Shadish et al., 2002)?
d. Are the objectives, questions, or hypotheses logically linked to the concepts and relationships (propositions) in the framework (Chinn & Kramer, 2011; Fawcett & Garity, 2009; Smith & Liehr, 2008)?
5. Variables
a. Are the variables reflective of the concepts identified in the framework?
b. Are the variables clearly defined (conceptually and operationally) and based on previous research or theories (Chinn & Kramer, 2011; Grove et al., 2013; Smith & Liehr, 2008)?
c. Is the conceptual definition of a variable consistent with the operational definition?
6. Design
a. Is the design used in the study the most appropriate design to obtain the needed data (Creswell, 2014; Grove et al., 2013; Hoe & Hoare, 2012)?
b. Does the design provide a means to examine all the objectives, questions, or hypotheses?
c. Is the treatment clearly described (Brown, 2002)? Is the treatment appropriate for examining the study purpose and hypotheses? Does the study framework explain the links between the treatment (independent variable) and the proposed outcomes (dependent variables)? Was a protocol developed to promote consistent implementation of the treatment to ensure intervention fidelity (Morrison et al., 2009)?
Did the researcher monitor implementation of the treatment to ensure consistency (Santacroce et al., 2004)? If the treatment was not consistently implemented, what might be the impact on the findings?
d. Did the researcher identify the threats to design validity (statistical conclusion validity, internal validity, construct validity, and external validity [see Chapter 8]) and minimize them as much as possible (Grove et al., 2013; Shadish et al., 2002)?
e. If more than one group was used, did the groups appear equivalent?
f. If a treatment was implemented, were the subjects randomly assigned to the treatment group or were the treatment and comparison groups matched? Were the treatment and comparison group assignments appropriate for the purpose of the study?
7. Sample, population, and setting
a. Is the sampling method adequate to produce a representative sample? Are any subjects excluded from the study because of age, socioeconomic status, or ethnicity, without a sound rationale?
b. Did the sample include an understudied population, such as young people, older adults, or a minority group?
c. Were the sampling criteria (inclusion and exclusion) appropriate for the type of study conducted (O’Mathuna et al., 2011)?
d. Was a power analysis conducted to determine sample size? If a power analysis was conducted, were the results of the analysis clearly described and used to determine the final sample size? Was the attrition rate projected in determining the final sample size (Aberson, 2010)?
e. Are the rights of human subjects protected (Creswell, 2014; Grove et al., 2013)?
f. Is the setting used in the study typical of clinical settings?
g. Was the rate of potential subjects’ refusal to participate in the study a problem? If so, how might this weakness influence the findings?
h. Was sample attrition a problem? If so, how might this weakness influence the final sample and the study results and findings (Aberson, 2010; Fawcett & Garity, 2009; Hoe & Hoare, 2012)?
8. Measurements
a. 
Do the measurement methods selected for the study adequately measure the study variables? Should additional measurement methods have been used to improve the quality of the study outcomes (Waltz et al., 2010)?
b. Do the measurement methods used in the study have adequate validity and reliability? What additional reliability or validity testing is needed to improve the quality of the measurement methods (Bialocerkowski et al., 2010; DeVon et al., 2007; Roberts & Stone, 2003)?
c. Respond to the following questions, which are relevant to the measurement approaches used in the study:
(1) Scales and questionnaires
(a) Are the instruments clearly described?
(b) Are techniques to complete and score the instruments provided?
(c) Are the validity and reliability of the instruments described (DeVon et al., 2007)?
(d) Did the researcher reexamine the validity and reliability of the instruments for the present sample?
(e) If the instrument was developed for the study, is the instrument development process described (Grove et al., 2013; Waltz et al., 2010)?
(2) Observation
(a) Is what is to be observed clearly identified and defined?
(b) Is interrater reliability described?
(c) Are the techniques for recording observations described (Waltz et al., 2010)?
(3) Interviews
(a) Do the interview questions address concerns expressed in the research problem?
(b) Are the interview questions relevant for the research purpose and objectives, questions, or hypotheses (Grove et al., 2013; Waltz et al., 2010)?
(4) Physiological measures
(a) Are the physiological measures or instruments clearly described (Ryan-Wenger, 2010)? If appropriate, are the brand names of the instruments identified, such as Space Labs or Hewlett-Packard?
(b) Are the accuracy, precision, and error of the physiological instruments discussed (Ryan-Wenger, 2010)?
(c) Are the physiological measures appropriate for the research purpose and objectives, questions, or hypotheses?
(d) Are the methods for recording data from the physiological measures clearly described? Is the recording of data consistent?
9. Data collection
a. 
Is the data collection process clearly described (Fawcett & Garity, 2009; Grove et al., 2013)?
b. Are the forms used to collect data organized to facilitate computerizing the data?
c. Is the training of data collectors clearly described and adequate?
d. Is the data collection process conducted in a consistent manner?
e. Are the data collection methods ethical?
f. Do the data collected address the research objectives, questions, or hypotheses?
g. Did any adverse events occur during data collection, and were these appropriately managed?
10. Data analysis
a. Are data analysis procedures appropriate for the type of data collected (Grove, 2007; Hoare & Hoe, 2013; Plichta & Kelvin, 2013)?
b. Are data analysis procedures clearly described? Did the researcher address any problem with missing data, and explain how this problem was managed?
c. Do the data analysis techniques address the study purpose and the research objectives, questions, or hypotheses (Fawcett & Garity, 2009; Grove et al., 2013; Hoare & Hoe, 2013)?
d. Are the results presented in an understandable way by narrative, tables, or figures or a combination of methods (APA, 2010)?
e. Is the sample size sufficient to detect significant differences, if they are present?
f. Was a power analysis conducted for nonsignificant results (Aberson, 2010)?

Critical Appraisal of Quantitative and Qualitative Research for Nursing Practice

When are Critical Appraisals of Studies Implemented in Nursing?

Students’ Critical Appraisal of Studies

Critical Appraisal of Studies by Practicing Nurses, Nurse Educators, and Researchers

Critical Appraisal of Research Following Presentation and Publication

Critical Appraisal of Research for Presentation and Publication

Critical Appraisal of Research Proposals

What are the Key Principles for Conducting Intellectual Critical Appraisals of Quantitative and Qualitative Studies?

Understanding the Quantitative Research Critical Appraisal Process

Step 1: Identifying the Steps of the Research Process in Studies

Guidelines for Identifying the Steps of the Research Process in Studies

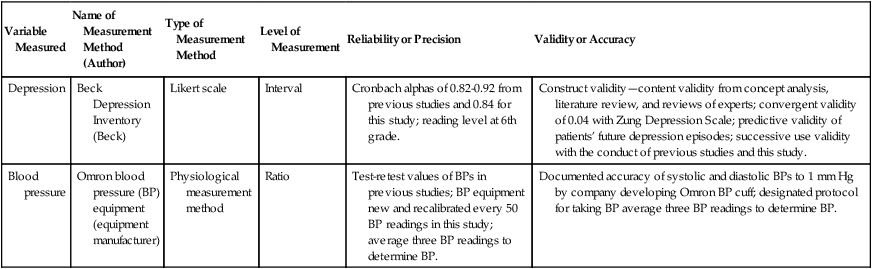

| Variable Measured | Name of Measurement Method (Author) | Type of Measurement Method | Level of Measurement | Reliability or Precision | Validity or Accuracy |
| --- | --- | --- | --- | --- | --- |
| Depression | Beck Depression Inventory (Beck) | Likert scale | Interval | Cronbach alphas of 0.82-0.92 from previous studies and 0.84 for this study; reading level at 6th grade. | Construct validity: content validity from concept analysis, literature review, and reviews of experts; convergent validity of 0.04 with Zung Depression Scale; predictive validity of patients’ future depression episodes; successive use validity with the conduct of previous studies and this study. |
| Blood pressure | Omron blood pressure (BP) equipment (equipment manufacturer) | Physiological measurement method | Ratio | Test-retest values of BPs in previous studies; BP equipment new and recalibrated every 50 BP readings in this study; average three BP readings to determine BP. | Documented accuracy of systolic and diastolic BPs to 1 mm Hg by company developing Omron BP cuff; designated protocol for taking BP average three BP readings to determine BP. |
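
The reliability entries in this table refer to Cronbach alphas and to averaging three BP readings. As a rough illustration of where such numbers come from, the following Python sketch computes Cronbach's alpha from item-level scores and averages repeated readings. The function names and all data values are hypothetical and are not taken from any study discussed in this chapter; sample variance (n - 1 denominator) is assumed.

```python
from statistics import mean, variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a scale.

    item_scores: list of respondents, each a list of k item scores.
    Uses alpha = k/(k-1) * (1 - sum(item variances) / variance(total scores)).
    """
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Transpose rows (respondents) into columns (items).
    items = list(zip(*item_scores))
    item_variance_sum = sum(variance(col) for col in items)
    total_variance = variance([sum(row) for row in item_scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary")
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


def average_reading(readings):
    """Average repeated physiological readings (e.g., three BP readings)."""
    return mean(readings)


# Hypothetical responses: 5 respondents answering a 4-item Likert scale.
responses = [
    [3, 4, 3, 4],
    [2, 2, 3, 2],
    [4, 5, 4, 4],
    [1, 2, 1, 2],
    [3, 3, 4, 3],
]
print("alpha:", round(cronbach_alpha(responses), 2))

# Hypothetical systolic readings (mm Hg) taken under one protocol.
print("mean systolic BP:", average_reading([118, 122, 120]))
```

When appraising a study, the computation itself matters less than whether the report states the alpha obtained in the present sample and describes the protocol for repeated physiological readings.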

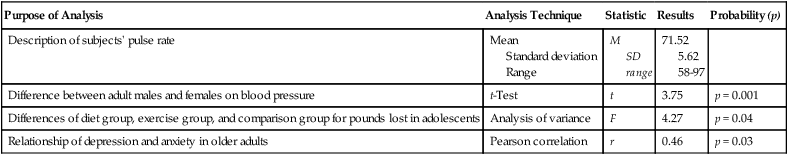

| Purpose of Analysis | Analysis Technique | Statistic | Results | Probability (p) |
| --- | --- | --- | --- | --- |
| Description of subjects’ pulse rate | Mean | M | 71.52 | |
| | Standard deviation | SD | 5.62 | |
| | Range | range | 58-97 | |
| Difference between adult males and females on blood pressure | t-Test | t | 3.75 | p = 0.001 |
| Differences of diet group, exercise group, and comparison group for pounds lost in adolescents | Analysis of variance | F | 4.27 | p = 0.04 |
| Relationship of depression and anxiety in older adults | Pearson correlation | r | 0.46 | p = 0.03 |
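
To make the columns of this table concrete, the short Python sketch below shows how the descriptive statistics (M, SD, range) are obtained and how a reported p value is read against a chosen level of significance. The pulse-rate values are hypothetical, the helper names are illustrative only, and the rule "significant when p is less than alpha" is an assumption about the convention a given study uses.

```python
from statistics import mean, stdev


def describe(values):
    """Return the mean, sample standard deviation, and range of a variable."""
    return {
        "M": round(mean(values), 2),
        "SD": round(stdev(values), 2),
        "range": (min(values), max(values)),
    }


def is_significant(p, alpha=0.05):
    """Assumed convention: a result is significant when p < alpha."""
    return p < alpha


# Hypothetical pulse rates (beats per minute) for a small sample.
pulse_rates = [64, 70, 72, 75, 68, 81, 77]
print(describe(pulse_rates))

# p values as reported in the example table.
reported = {"t-test": 0.001, "ANOVA": 0.04, "Pearson r": 0.03}
for alpha in (0.05, 0.01):
    significant = [name for name, p in reported.items() if is_significant(p, alpha)]
    print(f"alpha = {alpha}: {significant}")
```

With alpha set at 0.05, all three example results are significant; with alpha set at 0.01, only the t-test result is. This is why the guidelines ask whether the researchers stated the level of significance.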

Step 2: Determining the Strengths and Weaknesses in Studies

Guidelines for Determining the Strengths and Weaknesses in Studies
