Language and Thinking Processes

Key terms

Bias

Conceptual definition

Context specificity

Control

Dependent variable

Design

Emic

Etic

External validity

Independent variable

Instrumentation

Internal validity

Intervening variable

Manipulation

Operational definition

Rigor

Statistical conclusion validity

Validity

Variable

We now are ready to explore the language and thinking of researchers who use the range of designs relevant to health and human service inquiry. As discussed in previous chapters, significant philosophical differences exist between experimental-type and naturalistic research traditions. Experimental-type designs are characterized by thinking and action processes based in deductive logic and a positivist paradigm in which the researcher seeks to identify a single reality through systematized observation. This reality is understood by reducing it to its parts, observing and measuring the parts, and then examining the relationship among these parts. Ultimately, the purpose of research in the experimental-type tradition is to predict what will occur in one part of the universe by knowing and observing another part.

Unlike experimental-type design, the naturalistic tradition is characterized by multiple ontological and epistemological foundations. However, naturalistic designs share common elements that are reflected in their languages and thinking processes. Researchers in the naturalistic tradition base their thinking in inductive and abductive logic and seek to understand phenomena within the context in which they are embedded. Thus, the notion of multiple realities and the attempt to characterize holistically the complexity of human experience are two elements that pervade naturalistic approaches.

Mixed-method designs draw on and integrate the language and thinking of both traditions.

Now we turn to the philosophical foundations, language, and criteria for scientific rigor for experimental-type and naturalistic traditions. “Rigor” is a term used in research that refers to procedures that enhance and are used to judge the integrity of the research design. As you read this chapter, compare and contrast the two traditions and consider the application and integration of both.

Experimental-type language and thinking processes

Within the experimental-type research tradition, there is consensus about the adequacy and scientific rigor of action processes. Thus, all designs in the experimental-type tradition share a common language and a unified perspective as to what constitutes an adequate design. Although there are many definitions of research design across the range of approaches, all types of experimental-type research share the same fundamental elements and a single, agreed-on meaning (Box 8-1).

In experimental-type research, design is the plan or blueprint that specifies and structures the action processes of collecting, analyzing, and reporting data to answer a research question. As Kerlinger stated in his classic definition, design is “the plan, structure, and strategy of investigation conceived so as to obtain answers to research questions and to control variance.”1 “Plan” refers to the blueprint for action or the specific procedures used to obtain empirical evidence. “Structure” represents a more complex concept and refers to a model of the relationships among the variables of a study. That is, the design is structured in such a way as to enable an examination of a hypothesized relationship among variables. This relationship is articulated in the research question. The main purpose of the design is to structure the study so that the researcher can answer the research question.

In the experimental-type tradition, the purpose of the design is to control variance, that is, to restrict or control extraneous influences on the study. By exerting such control, the researcher can state with a degree of statistical assuredness that study outcomes are a consequence of either the manipulation of the independent variable (e.g., true experimental design) or that which was observed and analyzed (e.g., nonexperimental design). In other words, the design provides a degree of certainty that an investigator’s observations are not haphazard or random but reflect what is considered to be a true and objective reality. The researcher is thus concerned with developing the optimal design, one that eliminates or controls what researchers refer to as disturbances, variances, extraneous factors, or situational contaminants. The design controls these disturbances or situational contaminants through the implementation of systematic procedures and data collection efforts, as discussed in subsequent chapters. The purpose of imposing control and restrictions on observations of natural phenomena is to ensure that the relationships specified in the research question(s) can be identified, understood, and ultimately predicted.

The element of design is what separates research from the everyday types of observations and thinking and action processes in which each of us engages. Design instructs the investigator to “do this” or “don’t do that.” It provides a mechanism of control to ensure that data are collected objectively, in a uniform and consistent manner, with minimal investigator involvement or bias. The important points to remember are that the investigator remains separate from and uninvolved with the phenomena under study (e.g., to control one important potential source of disturbance or situational contaminant) and that procedures and systematic data collection provide mechanisms to control and eliminate bias.

Sequence of Experimental-Type Research

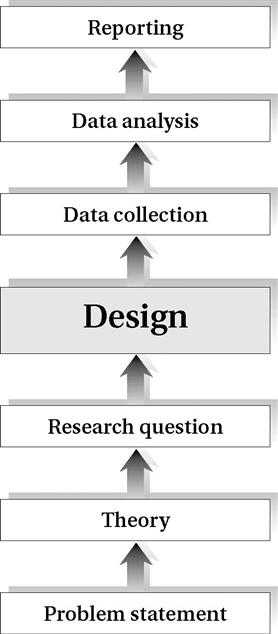

Design is pivotal in the sequence of thoughts and actions of experimental-type researchers (Figure 8-1). It stems from the thinking processes of formulating a problem statement, a theory-specific research question that emerges from scholarly literature, and hypotheses or expected outcomes. Design dictates the nature of the action processes of data collection, the conditions under which observations will be made, and, most important, the type of data analyses and reporting that will be possible.

Do you recall our previous discussion on the essentials of research? In that discussion, we illustrated how a problem statement indicates the purpose of the research and the broad topic the investigator wants to address. In experimental-type inquiry the literature review guides the selection of theoretical principles and concepts of the study and provides the rationale for a research project. This rationale is based on the nature of research previously conducted and the level of theory development for the phenomena under investigation. The experimental-type researcher must develop a literature review in which the specific theory, scope of the study, research questions, concepts to be measured, nature of the relationship among concepts, and measures that will be used in the study are discussed and supported. Thus, the researcher develops a design that builds on both the ideas that have been formulated and the actions that have been conducted in ways that conform to the rules of scientific rigor.

The choice of design is shaped not only by the literature and level of theory development but also by the specific question asked and by resources or practical constraints, such as access to target populations and monetary and staff considerations. There is nothing inherently good or bad about a design. Every research study design has its particular strengths and weaknesses. The adequacy of a design is based on how well the design answers the research question that is posed. That is the most important criterion for evaluating a design. If it does not answer the research question, then the design, regardless of how rigorous it may appear, is not appropriate. It is also important to identify and understand the relative strengths and weaknesses of each design element. Methodological decisions are purposeful and should be made with full recognition of what is gained and what is not by implementing each design element.

Structure of Experimental-Type Research

Experimental-type research has a well-developed language that sets clear rules and expectations for the adequacy of design and research procedures. As you see in Table 8-1, nine key terms structure experimental-type research designs. Let us examine the meaning of each.

TABLE 8-1

Key Terms in Structuring Experimental-Type Research

| Term | Definition |
| --- | --- |
| Concept | Symbolically represents observation and experience |
| Construct | Represents a model of relationships among two or more concepts |
| Conceptual definition | Concept expressed in words |
| Operational definition | How the concept will be measured |
| Variable | Operational definition of a concept assigned numerical values |
| Independent variable | Presumed cause of the dependent variable |
| Intervening variable | Phenomenon that has an effect on study variables |
| Dependent variable | Phenomenon that is affected by the independent variable or is the presumed effect or outcome |
| Hypothesis | Testable statement that indicates what the researcher expects to find |

Concepts

A concept is defined as the words or ideas that symbolically represent observations and experiences. Concepts are not directly observable; rather, what they describe is observed or experienced. Concepts are “(1) tentative, (2) based on agreement, and (3) useful only to the degree that they capture or isolate something significant and definable.”2 For example, the terms “grooming” and “work” are both concepts that describe specific observable or experienced activities in which people engage on a regular basis. Other concepts, such as “personal hygiene” or “sadness,” have various definitions, each of which can lead to the development of different assessment instruments to measure the same underlying concept.

Constructs

As discussed in Chapter 6, constructs are theoretical creations based on observations but cannot be observed directly or indirectly.3 A construct can only be inferred and may represent a larger category with two or more concepts or constructs.

Definitions

The two basic types of definition relevant to research design are conceptual definitions and operational definitions. A conceptual definition, or lexical definition, stipulates the meaning of a concept or construct with other concepts or constructs. An operational definition stipulates the meaning by specifying how the concept is observed or experienced. Operational definitions “define things by what they do.”

Variables

A variable is a concept or construct to which numerical values are assigned. By definition, a variable must have more than one value even if the investigator is interested in only one condition.
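As a rough illustration of how a concept becomes a variable, the sketch below operationalizes "employment" in two ways and checks that the resulting variable takes more than one value. The names `Participant`, `EmploymentStatus`, `employment_status`, and `is_variable`, the 1-to-5 difficulty rating, and all data values are hypothetical and are used only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class EmploymentStatus(Enum):
    # Hypothetical dichotomous coding; the numeric codes are arbitrary labels.
    NOT_EMPLOYED = 0
    EMPLOYED = 1


@dataclass
class Participant:
    weekly_hours_worked: float  # hours of paid work outside the home
    selfcare_difficulty: int    # hypothetical self-rating, 1 (none) to 5 (extreme)


def employment_status(p: Participant) -> EmploymentStatus:
    """Operationalize 'employment' as a two-valued variable."""
    if p.weekly_hours_worked > 0:
        return EmploymentStatus.EMPLOYED
    return EmploymentStatus.NOT_EMPLOYED


def is_variable(values) -> bool:
    """A variable must take more than one value in the data."""
    return len(set(values)) > 1


# Illustrative (made-up) participants.
participants = [Participant(40, 2), Participant(0, 4), Participant(20, 3)]
statuses = [employment_status(p).value for p in participants]
hours = [p.weekly_hours_worked for p in participants]

print(statuses, is_variable(statuses))
print(hours, is_variable(hours))
```

The same concept yields a different variable depending on the operational definition chosen: a dichotomous status code in one case and a count of hours in the other.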

There are three basic types of variables: independent, intervening, and dependent. An independent variable “is the presumed cause of the dependent variable, the presumed effect.”4 Thus, a dependent variable (also referred to as “outcome” and “criterion”) refers to the phenomenon that the investigator seeks to understand, explain, or predict. The independent variable, also referred to as the “predictor variable,” almost always precedes the dependent variable and may have a potential influence on it. An intervening variable (also called a “confounding” or an “extraneous” variable) is a phenomenon that has an effect on the study variables but that may or may not be the object of the study.

Investigators treat intervening variables differently depending on the research question. For example, an investigator may only be interested in examining the relationship between the independent and dependent variables and may thus statistically control or account for an intervening variable. The investigator would then examine the relationship after statistically “removing” the effect of one or more potential intervening variables. However, the investigator may want to examine the effect of an intervening variable on the relationship between the independent and dependent variables. For this question, the researcher would employ statistical techniques to determine the interrelationships.
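One common way to statistically "remove" the effect of a single intervening variable is a first-order partial correlation. The sketch below is only one possible technique, not necessarily the one a given study would use. The function names `pearson_r` and `partial_r` and all data values are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_r(x, y, z):
    """Correlation between x and y after removing the linear effect of z."""
    r_xy = pearson_r(x, y)
    r_xz = pearson_r(x, z)
    r_yz = pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Made-up data: independent, dependent, and one intervening variable.
hours_employed = [0, 10, 20, 30, 40, 15, 35, 5]
selfcare_difficulty = [5, 4, 3, 2, 1, 4, 2, 5]
family_support = [1, 2, 3, 3, 4, 1, 4, 2]

raw = pearson_r(hours_employed, selfcare_difficulty)
controlled = partial_r(hours_employed, selfcare_difficulty, family_support)
print(f"raw r = {raw:.2f}, r controlling for family support = {controlled:.2f}")
```

Comparing the raw and controlled coefficients shows how much of the observed relationship may be attributable to the intervening variable in this illustrative data set.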

Hypotheses

A hypothesis is defined as a testable statement that indicates what the researcher expects to find, given the theory and level of knowledge in the literature. A hypothesis is stated in such a way that it will either be verified or falsified by the research process. The researcher can develop either a directional or a nondirectional hypothesis (see Chapter 7). In a directional hypothesis, the researcher indicates whether she or he expects to find a positive relationship or an inverse relationship between two or more variables. A positive relationship is one in which both variables increase and decrease together to a greater or lesser degree.

In an inverse relationship, the variables are associated in opposite directions (i.e., as one increases, the other decreases). An inverse relationship may involve a hypothesis similar to the following: “An individual with a disability who remains employed will demonstrate less difficulty in performing self-care routines than an individual who is unemployed.”

In this statement, the expectation is that as the variable “employment” increases, difficulty in self-care will decrease.
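The difference between a positive and an inverse relationship can be sketched by checking whether two variables tend to move together or in opposite directions. The function `relationship_direction` and the data are hypothetical, and the check is purely descriptive. Testing a directional hypothesis would still require inferential statistics.

```python
def relationship_direction(x, y):
    """Classify the direction of association from the sum of cross-deviations."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cross = sum((a - mx) * (b - my) for a, b in zip(x, y))
    if cross > 0:
        return "positive"  # variables increase and decrease together
    if cross < 0:
        return "inverse"   # as one increases, the other decreases
    return "none"


# Made-up observations for five participants.
hours_employed = [0, 10, 20, 30, 40]
selfcare_difficulty = [5, 4, 4, 2, 1]
self_esteem = [2, 3, 3, 4, 5]

print(relationship_direction(hours_employed, selfcare_difficulty))
print(relationship_direction(hours_employed, self_esteem))
```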

Now that we have defined nine key terms that are essential to experimental-type designs, let us review the way they are actually used in research. Experimental-type research questions narrow the scope of the inquiry to specific concepts, constructs, or both. These concepts are then defined through the literature and are operationalized into variables that will be investigated descriptively, relationally, or predictively. The hypothesis establishes an equation or structure by which independent and dependent variables are examined and tested.

Plan of Design

The plan of an experimental-type design requires a set of thinking processes in which the researcher considers five core issues: bias, manipulation, control, validity, and reliability.

Bias

Bias is defined as the potential unintended or unavoidable effect on study outcomes. When bias is present and unaccounted for, the investigator may not be able to fully understand whether the study findings are accurate or reflect sources of bias and thus may misinterpret the results. Many factors can cause bias in a study (Box 8-2). We already discussed one source of bias, the “intervening variable.”

Another source is instrumentation. This involves the ways in which data are obtained in experimental-type approaches. The two major sources of bias from instrumentation are inappropriate data collection procedures and inadequate questions.

In the situation of a supervisor interviewing employees about their job satisfaction, the procedures for data collection are problematic and introduce bias into the study.

Interview questions that elicit a socially correct response and questions that are vague, unclear, or ambiguous introduce a source of bias into the study design.

Sampling is another major source of bias.

The construct of “function” may be inferred from observing concepts such as grooming, personal hygiene, and work or asking individuals to appraise their function in discrete areas. However, the construct of function is not observable unless it is broken down into its component parts or concepts.

The construct of “self-care” may be conceptually defined as the activities that are necessary to care for one’s bodily functions, whereas it is operationally defined as observations of an individual engaged in bathing, dressing, and grooming or individual appraisal of his or her level of difficulty in performing self-care activities.

If an investigator is interested in evaluating the adequacy of self-care routines of persons with disabilities who are employed, both self-care routine and employment are variables. Self-care routine may have multiple values of relevance to the investigator, such as the level of dependence, safety and efficiency of performance, and perceived difficulty. The investigator needs to determine which of the potential values that may be attributed to self-care would be of most interest in the inquiry. That is, there are different ways to measure self-care routines, and each approach can lead to a different understanding. Likewise, employment status can have multiple values (e.g., employed vs. not employed, number of hours working outside the home), and the investigator needs to determine how to operationalize this variable to best match the study’s purpose.

In the study of adequacy of self-care routines of employed individuals with disabilities, employment status represents the independent variable, whereas level of difficulty performing self-care routine represents the dependent variable. Intervening variables in this study may include any other variable that potentially influences either the independent or the dependent variable, such as family support, type and degree of disability, motivational status, and functional ability.

In a positive relationship, the researcher may hypothesize that the employed individual with a strong disability identity has greater self-esteem than the unemployed individual with a strong disability identity. Thus the hypothesis tests the positive movement of both variables.

An individual with a disability who remains employed will demonstrate less difficulty in performing self-care routines than an individual who is unemployed.

Assume you are a health and human service professional working in a community health center. Your supervisor, the agency administrator, is conducting a survey of worker satisfaction. To obtain information from employees, the supervisor decides to interview people. Knowing that this person is your superior, how likely are you to provide responses to job satisfaction questions, particularly knowing that your performance evaluation is next month?

Using this same example, now consider how questions are asked to obtain the data. Suppose this question is asked: “Don’t you agree that this is a great place to work?” Certainly this question is poorly phrased; it implies a socially and politically correct response and in essence urges the responder to agree. Now consider this question: “Don’t you think that the physical environment and the climate are excellent here?” In this case, the question is confusing and ambiguous. What does “the climate” refer to? Does it signify the weather? The emotional climate? The social climate? In this case, the responder is prompted or guided to produce a socially desirable response. It is also not clear what is being asked.

Suppose that only the supervisor’s social friends were selected as the targeted sample to participate in the survey and are viewed as representing the agency population. Such a sample would not represent the overall level of job satisfaction for all employees. In this example, the characteristics of the sample serve as potential bias or influence the outcomes of the study.
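The sampling example can be made concrete with a small numerical sketch. The employee names, satisfaction scores (on an assumed 1-to-10 scale), and the list of the supervisor's friends are all invented for illustration.

```python
from statistics import mean

# Hypothetical satisfaction scores (1 = very dissatisfied, 10 = very satisfied)
# for every employee in a ten-person agency.
satisfaction = {
    "Ana": 9, "Ben": 8, "Cy": 9, "Dee": 4, "Eli": 5,
    "Fay": 3, "Gus": 6, "Hal": 4, "Ivy": 5, "Jo": 7,
}

# Hypothetical convenience sample: only the supervisor's social friends.
friends = ["Ana", "Ben", "Cy"]

population_mean = mean(satisfaction.values())
friends_mean = mean(satisfaction[name] for name in friends)

# The gap between the two means is the distortion introduced by the sample.
sampling_bias = friends_mean - population_mean
print(f"population mean = {population_mean:.2f}")
print(f"friends-only mean = {friends_mean:.2f}")
print(f"overestimate = {sampling_bias:.2f}")
```

In this made-up data set, the friends-only sample overstates satisfaction because its members differ systematically from the rest of the agency. A sample drawn so that every employee has an equal chance of selection would be less prone to this distortion.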