When the Kolff–Brigham kidney was used, the heparin dose ranged from 6000 to 9000 units, and was infused prior to the start of the treatment. The dialyser was primed with blood, and the blood flow to the dialyser was limited to 200 ml at a time to prevent hypotension. To assist blood flow a pump was inserted in the venous circuit rather than the arterial side, to minimise the probability of pressure buildup in the membrane, which would cause a rupture.

This version of the Kolff–Brigham dialysis machine was used in 1948, and in all, over 40 machines were built and exported all over the world. Orders for spare parts were still being received as late as 1974, from South America and behind the Iron Curtain.

The 1950s

The Allis-Chalmers Corporation was one of the first companies to produce dialysis machines commercially. They were prompted into the manufacture when an employee developed renal failure. There was no machine available and so the firm turned its attention to producing a version of the Kolff rotating drum. The resulting machine was commercially available for $5600 and included all the sophistication available at the time. Allis-Chalmers produced 14 of these machines and sold them all over the United States into the early 1950s.

In October 1956, the Kolff system became commercially available, so the unavailability of equipment could no longer be used as an excuse for nontreatment of patients. Centres purchased the complete delivery system for around $1200 and the disposables necessary for the treatment were around $60. The system was still mainly used for reversible acute renal failure, drug overdose and poisoning.

The development of the dialyser

Jack Leonards and Leonard Skeggs produced a plate dialyser, which would permit a reduction in the priming volume, and allow negative pressure to be used to remove fluid from the patient’s system (Skeggs et al. 1949). A modification to this design included a manifold system, which allowed variation of the surface area without altering the blood distribution. Larger dialysers followed, which necessitated the introduction of a blood pump.

In the late 1950s Fredrik Kiil of Norway developed a parallel plate dialyser, with a large surface area (1 m²), requiring a lower priming volume. A new cellulose membrane, Cuprophan, was used and this allowed the passage of larger molecules than other materials that were available at that time. The Kiil dialyser could be used without a pump. Kiil dialysed the patients using their own arterial pressure. This dialyser was widely used because the disposables were relatively inexpensive when compared with other dialysers available at that time.

A crude version of the capillary-flow dialyser, the parallel dialyser, was developed, using a new blood pump, with a more advanced version of the Alwall kidney (MacNeill 1949). However, it was John Guarino who incorporated the important feature of a closed system, a visible blood pathway.

To reduce the size of the dialyser without reducing the surface area, William Y. Inouye and Joseph Engelberg produced a plastic mesh sleeve to protect the membrane. This reduced the risk of the dialysis fluid coming into contact with the blood. This was a closed system, so the effluent could be measured to determine the fluid loss of the patient. It is the true predecessor of the positive- and negative-pressure dialysers used today.

The first commercially available dialyser was manufactured by Baxter and based on the Kolff kidney. It provided a urea clearance of approximately 140 ml/min, equivalent to today’s models, and was based on the coil design. The priming volume was 1200–1800 ml and this was drained into a container at the end of treatment, refrigerated and used for priming for the next treatment. It was commercially available in 1956 at $59.00.

The forerunner of today’s capillary-flow dialyser was produced by Richard Stewart in 1960. The criteria for design of this hollow-fibre dialyser were low priming volume and minimal resistance to flow. The improved design contained 11 000 fibres which provided a surface area of 1 m².

Future designs for the dialyser focused on refining the solute and water removal capabilities, as well as reducing the size and priming requirements of the device, thus allowing an even higher level of precise individual care.

The emergence of home haemodialysis

It was Scribner’s shunt which provided vascular access in the early days, leading to the establishment of the first dialysis unit for patients at the University of Washington Hospital. Belding Scribner also developed a central dialysate delivery system for multiple use and set this up in the chronic care centre, which had 12 beds. These beds were quickly taken and his plan for expansion was rejected. The only alternative was to send the patients home, and so the patient and family were trained to perform the dialysis and care for the shunts. Home dialysis was strongly promoted by Scribner.

Stanley Shaldon reported in 1961 that a patient dialysing at the Royal Free Hospital in London was able to self-care by setting up his own machine, initiating and terminating dialysis (Figure 1.2); so home HD in the UK was made possible. The shunt was formed in the leg for vascular access, to allow the patient to have both hands free for the procedures. Hence Shaldon was able to report the results of his first patient to be placed on overnight home HD in November 1964. With careful patient selection, the venture was a success. Scribner started to train patients for home dialysis at this time, and his first patient was a teenager assisted by her mother. Home dialysis was selected for this patient so that she would not miss her high-school education. The average time on dialysis was 14 h twice weekly. To allow freedom for the patient, overnight dialysis was widely practised. At first, emphasis was placed on selection of a suitable patient and family, even to the extent of requiring a stable family relationship, before the patient could be considered for home training (Baillod et al. 1965).

Figure 1.2 Patient and nurse with dialysis machine and Kiil dialyser, 1968.

Source: With kind permission from Science and Society Picture Library.

From these beginnings, large home HD programmes developed in the United States and the United Kingdom, thus allowing expansion of the dialysis population without increasing hospital facilities. Many patients could now be considered for home treatment, often with surprisingly good results, as the dialysis could be moulded to the requirements of the individual, rather than the patients conforming to a set pattern. However, with the development in the late 1970s and early 1980s of CAPD as the first choice for home treatment, the use of home HD steadily dwindled. It is now, however, seeing renewed interest. The National Institute for Clinical Excellence (NICE) has published guidance on home versus hospital haemodialysis (National Institute for Clinical Excellence 2002) and recommends that all suitable patients should be offered the choice between home haemodialysis and haemodialysis in a hospital/satellite unit.

Vascular access for haemodialysis

It was Sir Christopher Wren, of architectural fame, who in 1657 successfully introduced drugs into the vascular system of a dog. In 1663, Sir Robert Boyle injected successfully into humans. Prison inmates were the subjects and the cannula used was fashioned from a quill. For HD to become a widely accepted form of treatment for renal failure, a way to provide long-term access to the patient’s vascular system had to be found and until this problem was solved, long-term treatment could not be considered. In order for good access to be established, a tube or cannula had to be inserted into an artery or vein, thus giving rise to good blood flow from the patient. The repeated access for each treatment quickly led to exhaustion of blood vessels for cannulation. The need for a system whereby a sufficiently large blood flow could be established for dialysis, without destroying a length of blood vessel every time dialysis was required, was imperative.

In the 1950s, Teschan, in the 11th Evacuation Hospital in Korea, was responsible for developing a method of heparin lock for continuous access to blood vessels. The cannulae were made from Tygon tubing and stopcocks, and the blood was prevented from clotting by irrigation with heparinised saline. It was not a loop design, as the arterial and venous segments were not joined together.

In 1960, in the United States, George Quinton, an engineer, and Belding Scribner, a physician, made use of two new synthetic polymers – Teflon and Silastic – and, using the tubing to form the connection between a vein and an artery, were able to reroute the blood outside the body (usually in the leg). This was known as the arteriovenous (AV) shunt. The tubing was disconnected at a union joint in the centre, and each tube then connected to the lines of the dialysis machine. At the end of treatment, the two ends were then reconnected, establishing a blood flow from the artery to the vein outside the body. In this way, repeat dialysis was made possible without further trauma to the vascular system.

This external shunt, whilst successful, had drawbacks. It was a potential source of infection, often thrombosed, and had a restrictive effect on the activity of the patient. This form of access is still occasionally used for acute treatment, although the patient’s potential requirements for chronic treatment must be considered when the choice of vessels is made, so that vessels to be used in the formation of an AV fistula are not scarred. In 1966, Michael Brescia and James Cimino developed the subcutaneous radial artery-to-cephalic vein AV fistula (Cimino and Brescia 1962), with Cimino’s colleague, Kenneth Appel, performing the surgery.

The AV fistula required less anticoagulation, had reduced infection risk and gave access to the blood stream without danger of shunt disconnection. Subsequently, a number of synthetic materials have been introduced to create internal AV fistulae (grafts). These are useful when the patient’s veins are not suitable to form a conventional AV fistula, such as in severe obesity, with loss of superficial veins due to repeated cannulation or in the elderly or those with diabetes.

Venous access by cannulation of the jugular or femoral veins has now replaced the shunt for emergency dialysis.

The present

Monitoring and total control of the patient’s therapy became more important as dialysis became widespread, and so equipment development has continued. Sophisticated machines incorporated temperature monitoring, positive-pressure gauges and flow meters. Negative-pressure monitoring followed, as did a wide range of dialysers with varying surface areas, ultrafiltration capabilities and clearance values. Automatic mixing and delivery of the dialysate and water supply to the machine greatly increased the margin of safety for the procedure, and made the dialysis therapy much easier to manage. The patient system that has evolved provides a machine that monitors all parameters of dialysis through the use of microprocessors, allowing the practitioner to programme a patient’s requirements (factors such as blood flow, duration of dialysis and fluid removal) so that the resulting treatment is a prescription for the individual’s needs. Average dialysis time has been reduced to 4 h, three times weekly or less if a high-flux (high-performance) dialyser is used.

The early 1970s saw the overall number of patients on RRT increase due to the increased awareness brought about by the availability of treatment. Free-standing units for the sole use of kidney dialysis came into being, leading to dialysis becoming a full-time business. Committees for patient selection were disbanded, and the problems concerned with inadequate financial resources came to the fore. Standards for treatment quality have now been set. Attempts continue to reduce treatment duration, to enhance the patient’s quality of life. Good nutrition has also emerged as playing a vital role in reducing dialysis morbidity and mortality. Dialysis facilities are demanded within easy reach of patients’ homes, and this expectation has led to the emergence of small satellite units, managed and monitored by larger units, as a popular alternative to home HD treatment. Between 2009 and 2010 the number of patients receiving home HD increased by 23%, from 636 to 780 (UK Renal Registry 2011).
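The growth figure quoted from the UK Renal Registry can be checked with simple arithmetic. The short Python sketch below is only an illustration; the function name `percent_increase` is an assumption introduced here and does not come from the registry report.

```python
def percent_increase(previous: float, current: float) -> float:
    """Return the percentage change from `previous` to `current`."""
    return (current - previous) / previous * 100


# Home HD patients in the UK: 636 in 2009, 780 in 2010 (figures from the text).
change = percent_increase(636, 780)
print(f"Increase in home HD patients: {change:.1f}%")
```

Rounded to the nearest whole number, the result agrees with the 23% quoted above.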

Peritoneal Dialysis (PD)

Peritoneal dialysis as a form of therapy for kidney disease has been brought about as a result of the innovative efforts and the tenacity of many pioneers over the past two centuries. It was probably the early Egyptian morticians who first recognised the peritoneum and peritoneal cavity as they embalmed the remains of their influential compatriots for eternity. The peritoneal cavity was described in 3000 BC in the Ebers papyrus as a cavity in which the viscera were somehow suspended. In Ancient Greek times, Galen, a physician, made detailed observations of the abdomen whilst treating the injuries of gladiators.

The earliest reference to what may be interpreted as PD was in the 1740s when Christopher Warrick reported to the Royal Society in London that a 50-year-old woman suffering from ascites was treated by infusing Bristol water and claret wine into the abdomen through a leather pipe (Warrick 1744). The patient reacted violently to the procedure, and it was stopped after three treatments. The patient is reported to have recovered, and was able to walk 7 miles (approximately 11 km) a day without difficulty. A modification of this was subsequently tried by Stephen Hale of Teddington in England: two trocars were used – one on each side of the abdomen – allowing the fluid to flow in and out of the peritoneal cavity during an operation to remove ascites (Hale 1744).

Subsequent experiments on the peritoneum (Wegner 1877) determined the rate of absorption of various solutions, the capacity for fluid removal (Starling and Tubby 1894) and evidence that protein could pass through the peritoneum. It was also noted that the fluid in the peritoneal cavity contained the same amount of urea that is found in the blood, indicating that urea could be removed by PD (Rosenberg 1916). This was followed by Tracy Putnam suggesting that the peritoneum might be used to correct physiological problems, when he observed that under certain circumstances fluids in the peritoneal cavity can equilibrate with the plasma and that the rate of diffusion was dependent on the size of the molecules. Research also suggested at this time that the clearance of solutes was proportional to their molecular size and solution pH, and that a high flow rate maximised the transfer of solutes, which also depended on peritoneal surface area and blood flow (Putnam 1923).

George Ganter was looking for a method of dialysis that did not require the use of an anticoagulant (Ganter 1923). He prepared a dialysate solution containing normal values of electrolytes and added dextrose for fluid removal. Bottles were boiled for sterilisation and filled with the solution, which was then infused into the patient’s abdomen through a hollow needle.

The first treatment was carried out on a woman who was suffering acute kidney injury following childbirth. Between 1 and 3 L of fluid were infused at a time, and the dwell time was 30 min to 3 h. The blood chemistry was reduced to within acceptable limits. The patient was sent home, but unfortunately she died, as it was not realised that it was necessary to continue the treatment in order to keep the patient alive.

Ganter recognised the importance of good access to the peritoneum, as it was noted that it was easier to instil the fluid than it was to attain a good return volume. He was also aware of the complication of infection, and indeed it was the most frequent complication that he encountered. Ganter identified four principles, which are still regarded as important today:

- There must be adequate access to the peritoneum.

- Sterile solutions are needed to reduce infection.

- Glucose content of the dialysate must be altered to remove greater volumes of fluid.

- Dwell times and fluid volume infused must be varied to determine the efficiency of the dialysis.

There are reports of 101 patients treated with PD in the 1920s (Abbott and Shea 1946; Odel et al. 1950). Of these, 63 had reversible causes, 32 irreversible and in two the diagnosis was unknown. There was recovery in 32 of 63 cases of reversible renal failure. Deaths were due to uraemia, pulmonary oedema and peritonitis.

Stephen Rosenak, working in Europe, developed a metal catheter for peritoneal access, but was discouraged by the results because of the high incidence of peritonitis. In Holland, P.S.M. Kop, who was an associate of Kolff during the mid-1940s, created a system of PD by using materials for the components that could easily be sterilised: porcelain containers for the fluid, latex rubber for the tubing, and a glass catheter to infuse the fluid into the patient’s abdomen. Kop treated 21 patients and met with success in ten.

Morton Maxwell, in Los Angeles, in the latter part of the 1950s, had been involved with HD, and it was his opinion that HD was too complicated for regular use. Aware of the problems with infection, he designed a system for PD with as few connections as possible. Together with a local manufacturer, he formulated a peritoneal solution, and customised a container and plastic tubing set and a single polyethylene catheter. The procedure was to instil 2 L of fluid into the peritoneum, leave it to dwell for 30 min, and return the fluid into the original bottles. This would be repeated until the blood chemistry was normal. This technique was carried out successfully on many patients and the highly regarded results were published in 1959. This became known as the Maxwell technique (Maxwell et al. 1959). This simple form of dialysis recognised that it was no longer necessary to have expensive equipment with highly specialised staff in a large hospital to initiate dialysis. All that was required was an understanding of the procedure and available supplies.

The catheter

Up to the 1970s, PD was used primarily for patients who were not good candidates for HD, or who were seeking a gentler form of treatment. Continuous flow using two catheters (Legrain and Merrill 1953) was still sometimes used, but the single-catheter technique was favoured because of lower infection rates.

The polyethylene catheter was chosen by Paul Doolan (Doolan et al. 1959) at the Naval Hospital in San Francisco when he developed a treatment procedure for use under battlefield conditions in the Korean War. Because of the flexibility of the catheter, it was considered for long-term treatment. A young physician called Richard Ruben decided to try this procedure, known as the Doolan technique (Ruben et al. unpublished work), on a female patient who improved dramatically, but deteriorated after a few days without treatment. The patient was therefore dialysed repeatedly at weekends, and allowed home during the week, with the catheter remaining in place. This was the first reported chronic treatment using a permanent indwelling catheter.

Catheters were made from tubing available on the hospital ward and included gallbladder trocars, rubber catheters, whistle-tip catheters and stainless-steel sump drains. However, as with the polyethylene plastic tubes, the main trouble was kinking and blockage. Maxwell described a nylon catheter with perforations at the curved distal end and this was the catheter which became commercially available. Advances in the manufacture of the silicone peritoneal catheter by Palmer (Palmer et al. 1964) and Gutch (Gutch 1964) included the introduction of perforations at the distal end and later Tenckhoff included the design of a shorter catheter, a straight catheter and a curled catheter. He also added the Dacron cuff, either single or double, to help to seal the openings through the peritoneum (Tenckhoff and Schechter 1968). He was also responsible for the introduction of the trocar that gave easy placement of the catheter. Dimitrios Oreopoulos, a Greek physician, was introduced to PD in Belfast, Northern Ireland, during his training and he noted the difficulties encountered with the catheters there. He had been shown a simple technique for inserting the catheter by Norman Dean from New York City, which allowed the access to be used repeatedly.

Peritoneal dialysis at home

In 1960, Scribner and Boen (Boen 1959) set up a PD programme that would allow patients to be treated at home. An automated unit was developed which could operate unattended overnight. The system used 40 L containers that were filled and sterilised at the University of Washington. The bottles were then delivered to the patient’s home, and returned after use. The machine was able to measure the fluid in and out of the patient by a solenoid device. An indwelling tube was permanently implanted into the patient’s abdomen, through which a tube was inserted for each dialysis treatment. The system was open, and therefore was vulnerable to peritonitis. A new method was then used, whereby a new catheter was inserted into the abdomen for each treatment, and removed after the treatment ended. This was still carried out in the home, when a physician would attend the patient at home for insertion of the catheter, leaving once the treatment had begun. The carer was trained to discontinue the treatment and remove the catheter. The wound was covered by a dressing, and the patient would be free of dialysis until the next week. This treatment was carried out by Tenckhoff et al. (1965) in a patient for 3 years, requiring 380 catheter punctures.

The large 40 L bottles of dialysate were difficult to handle and delivery to the home and sterilisation were not easy. Tenckhoff, at the University of Washington, installed a water still into the patient’s home, thus providing a sterile water supply. The water was mixed with sterile concentrate to provide the correct solution, but this method was not satisfactory as it remained cumbersome and dangerous due to the high pressure in the still. Various refinements were tried using this method, including a reverse osmosis unit, and this was widely used later for HD treatment.

Lasker, in 1961, realised the potential of this type of treatment and concentrated on the idea of a simple version by instilling 2 L of fluid by a gravity-fed system. This proved to be cheaper to maintain but was labour-intensive. Later that year, he was approached by Ira Gottscho, a businessman who had lost a daughter through kidney problems, and together they designed the first peritoneal cycler machine. The refinements included the ability to measure the fluid in and out, and the ability to warm the fluid before the fill cycle. Patients were sent home using the automated cycler treatment as early as 1970, even though there was a bias for HD at that time.

In 1969, Oreopoulos accepted a position at the Toronto Western Hospital and, together with Stanley Fenton, decided to use the Tenckhoff catheter for long-term treatment. Because of a lack of space and facilities at the hospital, it was necessary to send the patients home on intermittent PD. He reviewed the Lasker cycler machine and ordered a supply, and by 1974 was managing over 70 patients on this treatment at home. Similar programmes were managed in Georgetown University and also in the Austin Diagnostic Clinic in the USA.

The beginning of continuous ambulatory peritoneal dialysis (CAPD)

It was in 1975, following an unsuccessful attempt to haemodialyse a patient at the Austin Diagnostic Clinic, that an engineer, Robert Popovich, and Jack Moncrief became involved in working out the kinetics of ‘long-dwell equilibrated dialysis’ for this patient. It was determined that five exchanges each of 2 L per day would achieve the appropriate blood chemistry, and that the removal of 1–2 L of fluid from the patient was needed per day. Thus came the evolution of CAPD (Popovich et al. 1976).
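The daily fluid balance implied by this prescription can be worked through as a short calculation. The Python sketch below is illustrative only: the function name `daily_capd_volumes` and the assumption that drained volume equals instilled volume plus net fluid removed are simplifications introduced here, not part of the Austin group's kinetic model.

```python
def daily_capd_volumes(exchanges_per_day: int,
                       fill_volume_l: float,
                       net_fluid_removed_l: float) -> tuple[float, float]:
    """Return (litres instilled, litres drained) per day.

    Simplifying assumption: everything instilled is drained again,
    together with the net fluid removed from the patient.
    """
    instilled = exchanges_per_day * fill_volume_l
    drained = instilled + net_fluid_removed_l
    return instilled, drained


# Five 2 L exchanges per day, with 1 L and 2 L of net fluid removal
# (the range quoted in the text).
for removed in (1.0, 2.0):
    instilled, drained = daily_capd_volumes(5, 2.0, removed)
    print(f"Instilled {instilled} L, drained {drained} L per day")
```

Under these assumptions the patient handles 10 L of fresh dialysate a day and drains between 11 and 12 L.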

The treatment was so successful that the Austin group was given a grant to allow it to continue dialysing patients with CAPD. Strangely, the group’s first description and account of this clinical experience was rejected by the American Society for Artificial Internal Organs. At this time the treatment was called ‘a portable/wearable equilibrium dialysis technique’. The stated advantages compared to HD included:

- good steady-state biochemical control;

- more liberal diet and fluid intake;

- improvement in anaemia.

The main problems were protein loss (Popovich et al. 1978) and infection. It was recognised that the source of infection was almost certainly related to the use of the bottles. Oreopoulos found that collapsible polyvinylchloride (PVC) containers for the solution were available in Canada. Once the fluid was instilled, the bag could then be rolled up and concealed under the clothing. The fluid could be returned into the bag during draining by gravity, without a disconnection taking place (Oreopoulos et al. 1978). New spike connections were produced for access to the bag of fluid, and a Luer connection for fitting to the catheter, together with tubing devised for HD, greatly reduced the chances of infection. The patients treated on an intermittent basis (by intermittent peritoneal dialysis or IPD) were rapidly converted to CAPD and evaluation of the new treatment was rapid, due to the large numbers being treated. Following approval by the US Food and Drug Administration, many centres were then able to develop CAPD programmes.

The first complete CAPD system was released on to the market in 1979, giving a choice of three strengths of dextrose solution. Included in this system were an administration line, and sterile items packed together to form a preparation kit, to be used at each bag change in an attempt to keep infection at bay. The regime proposed by Robert Popovich and Jack Moncrief entailed four exchanges over a 24-hour period, three dwell times of approximately 4 h in the daytime, and one dwell overnight of 8 h. This regime is the one often used today.

The systems are continually being improved, with connectors moving from spike to Luer to eliminate as far as possible the accidental disconnection of the bag from the line. A titanium connector was found to be the superior form of adaptor for connection of the transfer set to the catheter, and probably led to a reduced infection rate for peritonitis. A disadvantage of this technique is the flow of fresh fluid down the transfer set along the area of disconnection, thus encouraging any bacteria from the disconnection to be instilled into the abdomen. The development of the Y-system (flush before fill) in the mid-1980s in Italy resulted in a further decrease in peritonitis.

Automated peritoneal dialysis

Automated PD in the form of continuous cyclic peritoneal dialysis (CCPD) was further developed by Diaz-Buxo in the early 1980s to enable patients who were unable to perform exchanges in the day to be treated with PD overnight.

Advances in peritoneal dialysis for special needs

More recent advances in CAPD treatment include a dialysate that not only provides dialysis but also contains 1.1% amino acid, for administration to malnourished patients. This is particularly useful for the elderly on CAPD, in whom poor nutrition is a well-recognised complication.

Those with diabetes were initially not considered for dialysis, because of the complications of the disease. Carl Kjellstrand, at the University of Minnesota, suggested that insulin could be administered to those with diabetes by adding it to the PD fluid (Crossley and Kjellstrand 1971). However, when this suggestion was first put forward, it was not adopted because the 30 min dwell did not give time for the drug to be absorbed into the patient. It became viable later, when the long dwell dialysis was initiated, and this gave the advantage of slow absorption, resulting in a steady state of blood sugar in the normal range, thus alleviating the need for painful injections (Flynn and Nanson 1979).

The realisation that patients are individuals, bringing their own problems associated with training, brought many exchange aid devices on to the market to assist the patient in the exchange procedure. These exchange devices were mainly used to assist patients with disabilities such as blindness or arthritis (particularly of the hands), and patients prone to repeated episodes of peritonitis.

The future

In some centres PD is usually the first choice of treatment for established renal disease. In many countries, because of the lack of facilities for in-centre HD, it will remain so. However, the relative prevalence of PD in the United Kingdom has been declining since its peak in the early 1990s, possibly because of the availability of satellite haemodialysis and a high level of PD technique failure. In 2010 only 18.1% of people who required RRT were on peritoneal dialysis (at 90 days after starting dialysis) compared with 68.3% of patients on haemodialysis (UK Renal Registry 2011).

Transplantation

In the beginning

Kidney transplantation as a therapeutic and practical option for renal replacement therapy (RRT) was first reported in published literature at the turn of the twentieth century. The first steps were small and so insignificant that they were overlooked or condemned.

The first known attempts at renal transplantation on humans were made without immunosuppression between 1906 and 1923 using pig, sheep, goat and subhuman primate donors (Elkington 1964). These first efforts were conducted in France and Germany but others followed. None of the kidneys functioned for long, if indeed at all, and the recipients all died within a period of a few hours to nine days later.

Of all the workers at this time, the contribution made by Alexis Carrel (1873–1944) remains the most famous. His early work in Lyons, France and in Chicago involved the transplantation of an artery from one dog to another. This work later became invaluable in the transplantation of organs. In 1906, Carrel and Guthrie, working in the Hull Laboratory in Chicago, reported the successful transplantation of both kidneys in cats and later a double nephrectomy on dogs, reimplanting only one of the kidneys. He found that the secretion of urine remained normal and the animal remained in good health, despite having only one kidney (Carrel 1983). Carrel was awarded the Nobel Prize in 1912 for his work on vascular and related surgery.

While at this stage there was no clear understanding of the problem, some principles were clearly learned. Vascular suture techniques were reviewed and the possibility of using pelvic implantation sites was investigated and practised. No further renal heterotransplantations (animal to human) were tried until 1963 when experiments using kidneys from chimpanzee (Reemtsma et al. 1964) and baboon were tried, with eventual death of the patients. This ended all trials using animal donation.

The first human-to-human kidney transplant was reported in 1936, by the Russian Voronoy, when he implanted a kidney from a cadaver donor of B-positive blood type into a recipient of O-positive blood type, a mismatch that would not be attempted today. The donor had died 6 h before the operation and the recipient died 6 h later without making any urine. The following 20 years saw further efforts in kidney transplantation, all without effective immunosuppression (Groth 1972). The extraperitoneal technique developed by French surgeons Dubost and Servelle became today’s standard procedure.

The first successes

The first examples of survival success of a renal transplant can probably be attributed to Hume. Hume placed the transplanted kidney into the thigh of the patient, with function for 5 months. Then at the Peter Bent Brigham Hospital in Boston, USA, in December 1954, the first successful identical-twin transplant was performed by the surgeon Joseph E Murray in collaboration with the nephrologist John P Merrill (Hume et al. 1955). The recipient survived for more than 2 decades. The idea of using identical twins had been proposed when it was noted by David C Miller of the Public Health Service Hospital, Boston, that skin grafts between identical twins were not rejected (Brown 1937). The application of this information resulted in rigorous matching, including skin grafting, prior to effective immunosuppression.

Over the period between 1951 and 1976 there were 29 transplants performed between identical twins, and the survival rate for 20 years was 50%. Studies of two successfully transplanted patients, who were given kidneys from their nonidentical twins, were also reported (Merrill et al. 1960). The first survived 20 years, dying of heart disease, and the second 26 years, dying of carcinoma of the bladder. Immunosuppression used in these cases was irradiation.

Immunosuppression

It was Sir Peter Medawar who appreciated that rejection is an immunological phenomenon (Medawar 1944), and this led to research into weakening the immune system of the recipients to reduce the rejection. In animals, corticosteroids, total body irradiation and cytotoxic drug therapy were used. Experiments in animals were still far from successful, as were similar techniques when used in humans. It was concluded that the required degree of immunosuppression would lead to destruction of the immune system and finally result in terminal infections.

A few patients were transplanted between 1960 and 1961 in Paris and Boston, using drug regimens involving 6-mercaptopurine or azathioprine, with or without irradiation. They all died within 18 months. Post-mortem examination of failed kidney grafts showed marked changes in the renal histology, which at first were thought unlikely to be due to immunological rejection, but later it was convincingly shown that this was indeed the underlying process.

In the early days of kidney transplantation, the kidney was removed from either a living related donor or a cadaver donor and immediately transferred to the recipient after first flushing the kidney with a cold electrolyte solution such as Hartmann’s solution. In 1967, Belzer and his colleagues developed a technique for continuous perfusion of the kidney using oxygenated cryoprecipitated plasma, which allowed the kidney to be kept for up to 72 h before transplantation. This machine perfusion required constant supervision, and it was found that flushing the kidney with an electrolyte solution and storage at 0°C in iced saline allowed the kidney to be preserved for 24 h or more (Marshall et al. 1988). This was a major development in transplantation techniques.

During the 1950s it was recognised that many of the survivors of the Hiroshima atomic bomb in 1945 suffered impairment to their immune system. It was concluded that radiation could therefore induce immunosuppression, and clinical total body irradiation was used to prolong the survival of renal transplants in Boston in 1958. This did improve the survival of some transplants; however, the overall outcomes were poor. There was clearly a need for a more effective form of immunosuppression than irradiation.

A breakthrough in immunosuppressive therapy occurred in 1962 in the University of Colorado, when it was discovered that the combination of azathioprine and prednisone allowed the prevention and in some cases reversal of rejection (Starzl et al. 1963). Transplantation could at last expand. A conference sponsored by the National Research Council and National Academy of Sciences in 1963 in Washington resulted in the first registry report, which enabled the tracing of all the early non-twin kidney recipients. In 1970, work commenced on the development of ciclosporin by Sandoz in Basle, Switzerland, following recognition of the potential (Borel et al. 1976). Clinical trials carried out in Cambridge, United Kingdom (Calne et al. 1979) showed that outcomes on renal transplantation were greatly improved, both with graft and with patient survival. Ciclosporin revolutionised immunosuppression treatment for transplant patients, even though it is itself nephrotoxic and its use needs close monitoring.

Tissue typing is a complex procedure and, as yet, is far from perfect. The use of the United Network for Organ Sharing has increased the efforts of matching donor and recipient, and data available from these sources show a significant gain in survival of well-matched versus mismatched cadaver kidneys. Cross-matching remains as important today as it was at its conception. None of the immunosuppressive measures available today can prevent the immediate destruction of the transplanted organ by humoral antibodies in the hyperacute rejection phase. This was recognised as early as 1965 (Kissmeyer-Nielsen et al. 1966), and it may be that this phenomenon holds the key to the future of successful heterotransplantation.

Blood transfusions

It was observed in the late 1960s that patients who had received multiple blood transfusions before organ transplantation did not have poorer graft survival than those who had not been transfused. Opelz and Terasaki (1974) observed that patients who had received no transfusions whatsoever were more likely to reject the transplant. It became evident, therefore, that a small number of blood transfusions resulted in improved organ survival, and so the transfusion policies for nontransfused recipients were changed throughout the world. This ‘transfusion effect’ is of much less importance in the ciclosporin era.

Present

Renal transplantation has been a dramatic success since the 1980s, with patient survival rates of not less than 97% at 6 months after transplantation for both living related and cadaver transplants. Although short-term survival for the graft is good – around 90% expectation of survival of the graft at 1 year – cadaveric graft survival at 5 years is around 70%, with a substantial minority losing their renal graft after 15 years.

The future

There is no doubt that renal transplantation is the treatment of choice for many patients requiring RRT, giving, in general, a better quality of life than dialysis. However, organs are in short supply. A change in the law may result in more kidneys becoming available for transplantation, by allowing easier access to donors or removing the need for relatives’ consent, but until a significant breakthrough is achieved there will continue to be a waiting list for transplantation and it will be necessary to continue to improve the techniques of dialysis. However, the number of people waiting for a kidney transplant has dropped slightly in recent years (down from 6918 in 2009 to 6300 in 2012) whilst the number of kidney transplants has increased, from 2330 carried out in 2008/2009 to 2568 carried out in 2011/2012 (NHS Blood and Transplant 2012). Reasons for this increase are discussed further in Chapter 13.

Summary

Dialysis has come a long way from the small beginnings of the hot baths in Rome. Refinements and improvements continue, most recently with the emergence of erythropoietin in the late 1980s to correct the major complication of anaemia in people with ERF. The challenge of adequate dialysis for all who need treatment remains.

Transplantation is still not available for all those who are eligible, and changes in the law may help with availability of donor organs. The multidisciplinary teams will continue to strive to give patients the best possible quality of treatments until a revolutionary breakthrough in prevention of ERF happens.

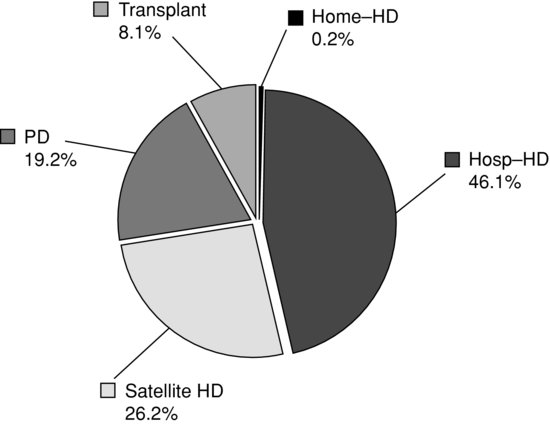

In the United Kingdom, the growth rate for RRT appears to be slowing: the UK Renal Registry (2011) reported that from 2009 to 2010 there was an increase of 1.5% in patients on haemodialysis (HD), a fall of 3.2% in those on peritoneal dialysis (PD) and an increase of 5.4% in those with a functioning transplant. The number of patients receiving home HD increased by 23%, from 636 patients to 780 patients since 2009 (Renal Registry 2011). See Figure 1.3.

Figure 1.3 Renal replacement therapy modality in the UK at day 90 (2009–2010).

Source: The data reported here have been supplied by the UK Renal Registry of the Renal Association. The interpretation and reporting of these data are the responsibility of the authors and in no way should be seen as an official policy or interpretation of the UK Renal Registry or the Renal Association.
