CHAPTER 8 Undertaking a program evaluation

After reading this chapter, you should be able to:

8.3 Definitions

Program evaluation is the systematic appraisal of a particular activity for the purpose of generating knowledge and planning future strategies. It is sometimes also called outcomes research. A number of definitions of program evaluation exist. Green (1990), who refers to the importance of benchmarking, defines program evaluation as comparing an activity of interest with a standard of acceptability. This definition is useful because it covers the process, impact and outcomes that can be examined, and accommodates a range of standards of acceptability. Evaluation must be congruent with the goals and objectives of the program activity, and these activities need to be measured appropriately. Evaluation is also a continuous process of asking questions, thinking about the responses, and reviewing the planned strategy and activity (Fleming & Parker 2007).

Several other parameters are important in undertaking a program evaluation; they include the program’s goals, outcomes, strategies, objectives, performance indicators and performance measures. These parameters are explained in Table 8.1. These terms are interpreted in different ways. Government organisations and funding bodies often use their own specific definitions of these terms, and it is important to use the definitions provided by these instrumentalities to make sure that particular components are addressed (Department of Health and Aged Care 2001).

Table 8.1 Parameters in a program evaluation

| Term | Meaning |
|---|---|
| Goal | The aim that the initiative seeks to achieve in general |
| Outcome | The changes in attitude, behaviour, health condition or status that the initiative seeks to achieve. It also concerns learning outcomes in relation to process, knowledge and skills |
| Strategy | The plan used to achieve the desired outcomes |
| Objective | The specific targets that need to be accomplished in an effort to achieve the outcomes |
| Performance indicator | The responses or measured changes in attitude, behaviour, health condition or status that indicate progress towards objectives and outcomes |
| Performance measure | The way in which changes in attitude, behaviour, health condition or status will be measured |

8.4 Forms of program evaluation

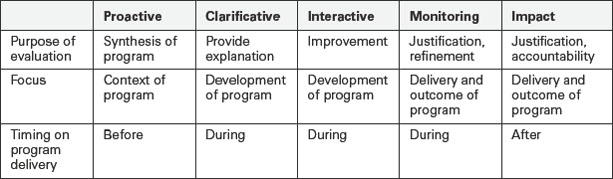

Program evaluation is classified according to the purpose of the intended activities. There are five forms of evaluation that underpin the various roles of evaluative activities: proactive, clarificative, interactive, monitoring, and impact (Owen & Rogers 1999). Table 8.2 shows the basic elements of each form, including the purpose, focus and timing for program delivery. It is important to note that within a program evaluation one might also use aspects of clinical audit or evidence-based guideline review, so it is advisable to make yourself familiar with the contents of Chapters 4 and 7.

8.4.1 Proactive evaluation

Proactive evaluation takes place before the program commences. Its purpose is to provide evidence of what is known about the issue, and to use this information in deciding how to develop a program. It also provides managers with details on how an organisation needs to change to improve its effectiveness (Owen & Rogers 1999, Scheirer 1998).

Some of the characteristic questions of this form include:

Three main approaches are used with this form of analysis: needs assessment, review of available literature, and review of best practice guidelines. A needs assessment addresses the problems or conditions of a particular community or organisation that should be included in future planning. It usually occurs when individuals are concerned about the present situation and type of service delivery. This approach provides valuable information about the initiatives that could be implemented to improve the situation in the future. A needs assessment is helpful in setting priorities for healthcare and allocating scarce resources. In addressing a need for a particular program, evaluators are concerned about differences between the current and desired situations, thereby establishing discrepancies. Reasons are sought for these discrepancies and decisions can be made about which needs should be given priority for action (Roth 1990).
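The discrepancy step of a needs assessment can be illustrated with a minimal Python sketch. It assumes each need is scored on a common 0 to 100 scale for its current and desired level; the indicator names, scores and the function `rank_by_discrepancy` are hypothetical and do not come from any of the studies cited.

```python
# Hypothetical service indicators scored 0-100: (current level, desired level).
# The names and values are illustrative only and are not taken from any study.
indicators = {
    "Service A": (40, 90),
    "Service B": (70, 80),
    "Service C": (55, 85),
}


def rank_by_discrepancy(indicators):
    """Return (name, gap) pairs ordered from the largest gap to the smallest."""
    gaps = {
        name: desired - current
        for name, (current, desired) in indicators.items()
    }
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


priorities = rank_by_discrepancy(indicators)
for name, gap in priorities:
    print(f"{name}: gap of {gap} points")
```

A ranking of this kind is only a starting point. The reasons behind each discrepancy and the resources available still need to be weighed before priorities for action are set.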

Data collection methods employed in proactive evaluation involve review of documents and databases, the Delphi technique, strategic planning meetings and focus groups. Examples of documents and databases that may be reviewed include hospital data such as morbidity, mortality and length of hospital stay (Centers for Disease Control and Prevention 1999). The Delphi technique requires individuals to work independently and to pool their written ideas about a particular issue. Each individual is given a list of the collected ideas, which are formatted into a set of scaled items, and asked to assess their relative importance and relevance. Strategic planning meetings are events designed to provide direction for a projected program. Individuals involved in delivering the program collaborate to consider barriers and facilitators to achieving certain activities, what they have achieved and what they wish to achieve in the future. The focus group is a method that aims to collect information from a selected group of people. While the goal of the Delphi technique is to achieve consensus, the desired outcome of focus groups is to obtain a range of views.
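One way the scaled items from a Delphi round might be summarised is sketched below in Python. The sketch assumes a five-point importance scale and treats an interquartile range of one point or less as consensus. Both the scale and the threshold are assumptions made for illustration, since the text does not prescribe a consensus rule, and the item names and ratings are hypothetical.

```python
from statistics import median, quantiles

# Hypothetical ratings: each item scored from 1 (low) to 5 (high) by five panellists.
ratings = {
    "Item A": [5, 4, 5, 4, 5],
    "Item B": [2, 5, 1, 4, 3],
}


def summarise(scores, max_iqr=1.0):
    """Summarise one item; max_iqr is an assumed consensus threshold."""
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1
    return {"median": median(scores), "iqr": iqr, "consensus": iqr <= max_iqr}


summary = {item: summarise(scores) for item, scores in ratings.items()}
for item, result in summary.items():
    status = "consensus" if result["consensus"] else "re-rate in next round"
    print(f"{item}: median {result['median']}, IQR {result['iqr']} ({status})")
```

Items that do not reach the chosen threshold would typically be returned to the panel for another round of rating.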

The following case studies are provided to highlight how proactive evaluation uses literature review and evidence-based guideline review to inform the program of work to be implemented (see Ch 4 on developing evidence-based guidelines).

CASE STUDY Review of available literature and best practice guidelines

Bucknall et al (2001) examined best practice guidelines for acute pain against past research on pain. The paper evaluated the implications of the National Health and Medical Research Council (NHMRC) acute pain guidelines on nursing practice, and addressed the inadequacies of current implementation policies of hospitals. The authors argued that the NHMRC pain management guidelines failed to decrease patients’ pain because healthcare professionals, organisations and researchers largely ignored the impact of contextual issues on clinical decision making. Contextual issues included patient involvement and control, nurses’ pain assessment and management skills, multidisciplinary collaboration, organisational management, educational needs and evaluation of pain-management effectiveness. The authors recommended that future pain management programs should consider acknowledging contextual issues in their development and implementation.

CASE STUDY Review of available literature and hospital protocols

With increasing technology and medication complexity, hospital policies and protocols are helpful in supporting nurses to integrate new knowledge into practice and to guide effective decision making. Manias et al (2005) examined how newly graduated nurses used hospital protocols in their medication management activities. While some protocols were developed from evidence-based guidelines, such as checking the patient’s identity before medication administration, others were derived from what was considered to be good practice in the hospital, such as double checking of opiate medications. Newly graduated nurses were observed and interviewed on how they carried out various medication protocols. These protocols included: checking the patient’s identity before administering medication; double checking the administration of parenteral or opioid medications with another nurse; checking the identity band of the patient against the identity label on the medication order; writing or initiating medication incident reports if a medication error occurred; following up unclear medication orders with an experienced doctor or nurse; checking unknown medications using written medication resources; and observing patients actually consuming oral medications. Some protocols were observed to be followed according to expected recommendations. There were 34 situations observed where nurses were confronted with an unclear medication order, and in all of these situations they sought advice from an experienced doctor or nurse. Nurses observed patients taking oral medications in 90% of situations (118/131), and in 80% of situations they administered parenteral or opioid medications using a double checking procedure with other nurses (24/30). They were also observed to follow up on unknown medications in 86% of situations (12/14). There were two medication errors associated with medication administration; however, these were not documented as medication incidents. In addition, patients’ identity labels were only checked in 27% of situations (48/175). The study demonstrated that evaluation of protocol adherence can be a useful means of determining the extent to which nurses provide safe medication management.
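The adherence percentages reported in this case study can be reproduced from the observed counts. The short Python sketch below uses only the counts quoted above; the protocol labels and the helper `adherence_rate` are shorthand introduced here for illustration.

```python
# Counts quoted in the case study: (situations where the protocol was followed,
# situations observed). The labels are shorthand, not the study's own wording.
observed = {
    "sought advice on unclear order": (34, 34),
    "observed patient taking oral medication": (118, 131),
    "double checked parenteral or opioid medication": (24, 30),
    "followed up unknown medication": (12, 14),
    "checked patient identity label": (48, 175),
}


def adherence_rate(followed, total):
    """Return adherence as a percentage rounded to the nearest whole number."""
    return round(100 * followed / total)


rates = {name: adherence_rate(f, t) for name, (f, t) in observed.items()}

# List protocols from lowest to highest adherence to highlight where practice falls short.
for name, rate in sorted(rates.items(), key=lambda item: item[1]):
    print(f"{rate:3d}%  {name}")
```

Sorting from lowest to highest places the identity label check first, which is the protocol the study found was followed least often.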

8.4.2 Clarificative evaluation

Clarificative evaluation examines the underlying structure, rationale and function of a program. It focuses on the internal components of a program rather than the way in which the program is implemented. The need for clarification may arise when there are conflicts over components of a program’s design. Individuals may also require further details about how the program activities link with intended outcomes (Owen & Rogers 1999).

Characteristic questions associated with this form of evaluation include:

Two main approaches are used in this form of evaluation: program logic development and accreditation (Owen & Rogers 1999). Program logic development involves examining available documentation and conducting interviews with relevant stakeholders to construct an overview of what the program is intended to do. It also considers how the current program could be changed to address the intended outcomes.

The following case studies are provided to highlight how clarificative evaluation examines underlying structure, rationale and function to determine whether the design of the program met the needs of the program participants.

CASE STUDY Program logic development 1

Manias et al (2000) described the benefits of combining objective and naturalistic methods when undertaking a formative evaluation of a computer-assisted learning program in pharmacology for nursing students. During the design and development phases, the pharmacology program was evaluated using observation of student pairs, student questionnaires and student focus group interviews. The combination of evaluation methods enabled complex issues underlying the program effectiveness to be addressed.

CASE STUDY Program logic development 2

Ellis and Hogard (2003) evaluated a pilot scheme for clinical facilitators in acute medical and surgical wards. The clinical facilitators were employed to address two issues: i) the nursing skills demonstrated by newly graduated nurses, and ii) concerns about supervision in clinical placement. The purpose of the clinical facilitators was to enhance undergraduate nurses’ competence on clinical placement. Evaluation involved a three-phase approach covering outcomes, process and multiple stakeholder views. The work of clinical facilitators was evaluated using interviews, focus groups and questionnaires with students, clinical staff and university staff. While clinical facilitators were evaluated in a positive way, concerns were raised about communication. University staff also tended to rate the clinical facilitators less favourably than did students and clinical staff.
