Introduction

Nurses must be able to communicate with patients, carers and health care professionals on a daily basis. Therefore, having a good understanding of the nature of communication as well as the importance of communication is essential for building trust within the nurse–patient relationship. In discussing the nature of communication, we have used the benchmark standards from the UK Department of Health ‘Essence of care’ (Department of Health 2003) framework within the context of nursing and health care. To help you develop the necessary knowledge you will have an introduction to the related physiology of hearing, sight and speech along with an exploration of the psychosocial importance of communication. Communication in nursing practice can be complex and challenging to the novice and we shall be discussing some strategies that you may like to develop and use with different patient/client groups. Keeping accurate records is essential to ensure continuity in care delivery and so we shall discuss record-keeping as well as the use of information technology and its importance in communication.

Communication has three fundamental components, all of which are necessary for it to live up to its name: these are the sender, the receiver and the message (Figure 7.1). This diagram does not fully represent the complexity of interactions, because it depicts communication as a one-way process (Bradley & Edinberg 1990), whereas in reality messages pass back and forth between both parties simultaneously. It does, however, enable a reasonably simple analysis. The message is encoded into a symbolic representation of the thoughts and feelings of the sender and then, in turn, decoded and interpreted by the receiver. Communication is judged as successful when the received message is close enough to the message the sender intended. The potential for things going wrong at any of these stages is considerable. The coding of the message is achieved through a number of channels.

Figure 7.1 The three components of communication.

Channels of communication

Because of the power of human language, it is easy to equate the message with words and overlook other ways in which we communicate, such as with our bodies, with posture, with gestures, and with tone of voice and intonation. Non-verbal communication consists of all forms of human communication apart from the purely verbal message (Wainwright 2003). While verbal communication is perceived mainly through the ears, non-verbal communication is perceived mainly through the eyes, although it is occasionally supplemented by other senses.

Humans are the only species to have developed elaborate systematic language and we tend to concentrate on verbal messages. Other species use sound, colour, smell, ritualistic movements, chemical markers and other means to transfer information from one member to another. Scientists are constantly discovering how complex and subtle communication systems are, even in the lowliest of species, and how necessary these systems are for survival. Non-verbal communication is often of a more primitive and unconscious kind than is verbal communication, and powerfully modifies the meaning of words.

In face-to-face communication, verbal and non-verbal channels are open and carrying information. When they give a consistent message (congruence), the receiver is likely to receive the message at face value as being sincere. When there are inconsistencies, non-verbal communication is normally taken to be more reliable than the words spoken (see the example in Case history 7.1). This is summed up in the cliché ‘Actions speak louder than words’. We commonly hear people say ‘It’s not so much what he said, it’s how he said it’, or ‘Even though she’s tough with you, you know she really cares’. The subtlety of human communication arises from the interplay of these various channels. The capacity to be ironic or sarcastic, or to mean the opposite of what is said, depends on the exploitation of this rich resource in human communication.

Case history 7.1

Incongruence in communication

As David prepared to leave the acute psychiatric ward where he had spent the past 3 weeks, everything that he said to the nurse suggested that he was confident about managing his own life after his discharge home. Despite David’s words, the nurse sensed that, far from being confident, David was actually apprehensive about leaving the ward. At first, the nurse was unsure of the basis for this interpretation, but when asked to justify the assessment by a colleague, realized what had been observed. David had avoided eye contact by looking down at the floor, he was hesitant in packing his belongings and, as he turned to leave, his shoulders dropped slightly and he adopted a heavy posture as he walked away. It was the non-verbal communication that reflected his true feelings, and David was given the opportunity to talk about his fears and address the issues involved before being discharged.

Not all communication is face-to-face with all channels open. Consider the variety of communication modes that a nurse might use in the course of a day’s work:

• Rules and procedures.

• Memos.

• Reports.

• Letters.

• Information technology (e-mail, discussion forums, Internet sites).

• Telephone/mobile calls.

• Face-to-face contact.

Written rules of procedure or safety regulations may be referred to as impersonal and faceless, because they are sent from ‘the authorities’ to a generalized person of no particular identity. A memo can have personal or impersonal qualities, depending on whether it is sent from one person to another or is of a general nature. A report is a more formalized method of information-sharing and may be written to selected individuals or for general access. A letter is more commonly a communication between two individuals, the writer having in mind a particular person when it is written, although it can, of course, also be generalized and public.

Information technology is increasingly being used as a method of communication in all walks of life. It may take the form of direct messages, such as electronic mailing systems, or data files that are accessible to others. There are an increasing number of nurse discussion forums which offer topical debate and information on contemporary issues. A telephone call allows for the immediate interaction that is missing from the written word, so that the message is not only in what is being said but also in how it is said. If a telephone message is written down, it is reduced to the written channel only. Only in face-to-face contact are all channels open. That is why, when important matters are being discussed, there is no substitute for face-to-face meetings. Lovers have always known this!

Different modes for different uses

Everyday experience shows that we gradually learn the subtleties of each mode of communication and use them to suit our purposes. Making a complaint about an aspect of one’s health care, for example, is most easily done for some people by writing a letter, so that the complainer is in sole charge of the language used. Another person might prefer the fuller contact of a telephone call but avoid face-to-face encounter. All of this points to the complexity of human communication.

Channels of non-verbal communication

Use of touch

Touch has been said to:

• Connect people.

• Provide affirmation.

• Be reassuring.

• Decrease loneliness.

• Share warmth.

• Provide stimulation.

• Improve self-esteem.

On the other hand, not all touch is interpreted positively, even when so intended by the nurse (Davidhizar & Newman 1997). Because of these potential positive or negative effects, nurses need both to understand touch and to value the ability to use it therapeutically. Touch, in a nursing context, may be either instrumental (which includes all functional touch necessary to carry out physical procedures such as wound dressing or taking a pulse) or expressive (used to convey feelings). Nurses are unusual in that they are ‘licensed touchers’ of relative strangers. This legitimized transgression of the normal social code requires that nurses are sensitive to the reactions of patients.

Cultural uses of touch vary from country to country. Murray & Huelskoetter (1991) noted that Italian, Spanish, Jewish, Latin American and Arabian cultures, and those of some South American countries, typically have relationships that are more tactile. Watson (1980) identified England, Canada and Germany as countries where touch is more taboo. These cultural differences in social codes heighten further the need for sensitivity when judging if touch is appropriate (see Box 7.1). Whitcher & Fisher (1979) found that the use of therapeutic (expressive) touch by nurses preoperatively produced a positive response to surgery among female patients, but not male patients. There is some evidence that males may perceive touch differently to females in relation to aspects of status or dominance (Henley 1977).

Box 7.1

Points to consider in relation to touch

• Reflect on any recent experiences that involved you touching patients.

• Determine if your touch was instrumental or expressive.

• How sensitive were you to how the patient interpreted your touch?

• Consider the respective age, gender, ethnic background and social class of the patient and yourself.

• How appropriate was your touch?

Proxemics

Proxemics refers to the spatial position of people in relation to others, such as respective height, distance and interpersonal space. Hall (1966) originally identified four different interactive zones for face-to-face contact and considered anything less than 4 feet as intrusive of interpersonal space in most relationships other than intimate ones. The four zones are used differently according to the topic of conversation and the relationships of the participants. Patients in hospital settings may not perceive that they have any real personal space. French (1983) suggested that an area of 2 feet around a patient’s bed and locker could be viewed as personal space. One’s spatial orientation and respective height to the other person are also significant and should be considered when engaging with others.

Posture

Posture conveys information about attitudes, emotions and status. For example, Harrigan et al (2004) suggested that depressed and anxious patients can be identified purely by non-verbal communication. A person who is depressed is more likely to be looking down and avoiding eye contact, with a down-turned mouth and an absence of hand movements. An anxious person may use more self-stroking with twitching and tremor in hands, less eye contact and fewer smiles. Of course, the nurse’s posture carries just as many social messages. The ideal attending posture to convey that one is listening has been variously described in the literature (Egan 1998).

Kinesics

This includes all body movements and mannerisms. Gestures are commonly used to send various intentional messages, particularly for emphasis or to represent shapes, size or movement, or may be less consciously self-directed and sometimes distracting to others. Head and shoulder movements are used to convey interest, level of agreement, defiance, submission or ignorance.

Facial expression

According to Argyle (1996), facial expressions provide a running commentary on emotional states. Ekman & Friesen (1987) identified six standard emotions that are universally recognizable across all cultures by consistent movement of combinations of facial muscles, namely happiness, surprise, anger, fear, disgust and sadness.

Gaze

Eye contact is a universal requirement for engagement and interaction. In Western society listeners look at speakers about twice as much as speakers look at listeners (Argyle 1996), although there are cultural variations to this. When people are dominant or aggressive they tend to look more when they are speaking. The absence of eye contact may indicate embarrassment, disinterest or deception.

Our self-presentation makes statements about our social status, occupation, sexuality and personality. Some aspects of appearance can be easily manipulated, such as our dress and hairstyle, but other features are beyond our control, such as height. Both types of presentation communicate messages about who we are. Nurses have often discussed the merits and disadvantages of wearing a uniform. Uniforms and other forms of regalia make nurses easily identifiable to others and provide additional information about seniority and qualifications. In some contexts, uniforms may be seen to present a barrier to establishing therapeutic relationships by reinforcing the power of the professional in the relationship. Try Activity 7.1.

Activity 7.1

Think about your chosen branch of nursing and the different contexts in which it takes place.

• How does the wearing of a uniform affect relationships with others?

• What are the advantages and disadvantages of wearing a uniform in these different settings?

Paralanguage

Paralinguistics includes those phenomena that appear alongside language, such as accent, tone, volume, pitch, emphasis and speed. It is these refinements of the lexical content that provide meaning to spoken communication. It is possible to use the same actual words but with contrasting paralanguage and convey an entirely different meaning. After reading Case history 7.2, which concerns communication with an unconscious patient, try Activity 7.2.

Case history 7.2

An unconscious patient and communication

Deana is a 48-year-old woman who is in an acute medical ward following a cerebrovascular accident. She has been unconscious for 2 days since she first experienced a bad headache and then gradually became unconscious. Deana’s chances of surviving depend upon how much intracranial bleeding has taken place and whether it has been stopped. All of her physical care is undertaken by the nursing staff. She is entirely reliant on the actions of others for her survival. The nurses are by necessity in total control. Even though she is unconscious, each of the nurses who attend to her physical needs converses with Deana as they go about their work, either giving information to explain their actions or just chatting to pass the time of day.

Activity 7.2

Reflect on these questions, which relate to Case history 7.2.

• How might you feel about talking to someone like Deana who is unconscious and unable to respond or indicate whether she is hearing or understanding what is being said?

• What other methods of communication might you use with Deana?

• Why do you think the nurses are taking the trouble to talk to Deana?

You might like to discuss your answers with a colleague and then perhaps with your mentor and compare their responses to yours.

Physical environment

The nature and organization of the physical environment in which any communication takes place will have a significant impact on the interaction. In Case history 7.2, the patient is completely at the mercy and compassion of her nurses. Fortunately, Deana is blessed by having nurses who are sensitive to her need for sensory stimulation and companionship, even though they are not getting an obvious response from her. She might instead have had nurses who were less aware of the importance of communication, both through touch and through sound, in helping her remain orientated, even though she is unable to communicate her level of consciousness. Less skilful or less insightful nurses might treat her as a work object rather than a human being: simply a bed number that needs specific tasks to be done.

Another clear example is the difference between visiting patients in their homes and nursing them in a hospital ward. Being a visitor in a patient’s or client’s home significantly alters the power relationship and thus the locus of control. The nurse has less control over the environment in the patient’s home setting and is a guest. Hospital buildings are unusual settings for most patients and provide consistent reminders that the health care professionals are in control of the environment to a large extent. Wilkinson (1992, 1999) recognized that nursing environments were often not conducive to open communication, even if the nurses were highly skilled in it. Making the most strategic use of facilities available according to the nature and purpose of the exchange is an important aspect of nursing communication.

The functions of non-verbal communication

All of the channels of non-verbal communication described above are extremely important and may constitute up to 90% of the communication taking place. They do of course occur in various combinations with each other, not separately as above. The various functions of non-verbal communication are summarized in Box 7.2.

Box 7.2

The functions of non-verbal communication

• To replace speech. A meaningful glance, a caring touch, a deliberate silence. Also, specific symbolic codes such as Makaton (if you are interested, information about Makaton is readily available on the Internet).

• To complement the verbal message. The main function, and used to add meaning to speech. If we say we are happy we are expected to look happy. We use gestures to provide clarification and emphasis.

• To regulate and control the flow of communication. We generally take turns in conversation by prompts indicating ‘I am finishing, you can take over’. Some may use non-verbal communication to dominate.

• To provide feedback. We monitor others’ non-verbal communication to interpret their reactions; for example, are they listening, worried? Have they understood? There is evidence that patients place great emphasis on non-verbal communication because of the technical nature of some verbal messages given by health carers (Ambady et al 2002, Friedman 1982).

• To help define relationships between people. An example is the wearing of a uniform in the hospital setting to indicate role, function and status.

• To convey emotional states. Emotions and attitudes are recognized primarily on the basis of non-verbal communication.

• To engage and sustain rituals. Argyle (1996) identified an additional function, namely ritualistic. Certain meaningful behaviours are expected at ceremonies such as weddings and graduations.

Observation of channels of communication

So how are humans able to develop all these subtleties in their everyday speech and language use? Why is it important to understand the physiological processes? With these questions in mind, complete Activity 7.3 and Activity 7.4.

Activity 7.3

Next time you are in a public area, take time to observe the non-verbal messages that are being used. Identify the channels and functions of the messages that are exchanged.

Activity 7.4

If you have access to recording equipment, arrange to both audiotape and videotape a short conversation with a fellow student. Replay the interaction in the following order:

• Audiotape (alternatively you could listen to the videotape, facing away from the TV).

• Videotape with the sound turned right down.

• Videotape with the sound.

Make notes as you replay each stage. In particular, contrast the different stages, noting limitations of the first two playbacks. Identify how meaning is changed by additional data.

Speech, hearing and vision: anatomy and physiology

Understanding how we are able to hear, speak and see is important to help you support people who have a deficiency in one or more of these senses. It is useful to remember that normal communication involves all three senses, although we may believe it is our hearing that provides all the information.

Speech and language

Although language is not unique to humans, the use of speech to convey concepts seems to be. So how do we manage to create sounds that are shaped into words to convey concepts such as mathematics, music, history, love and so on?

The physiology of speech can be divided into two distinct entities:

• The mechanics of speech, which involves manipulating air to create vibrations (sounds).

• The neurophysiology of speech, which helps us to create sentences (language construction), to understand what is being said (perception) and which helps us use and control the various muscles involved in speech.

The mechanics of speech

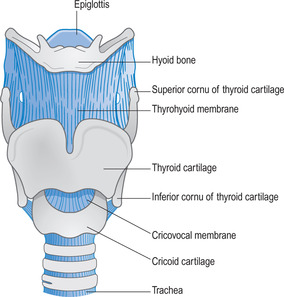

Air is supplied from the lungs by carefully controlling breathing to allow regulated volumes of air to pass through the upper airway passages. The muscles involved are those of normal breathing (i.e. the diaphragm and the intercostal muscles between the ribs). At the top end of the trachea is the larynx (or voice box), which is a hollow structure made mostly from tough cartilage and lined with mucous membrane (Figure 7.2). The narrowest point of the air passage inside the larynx is called the glottis, and across this point are stretched the vocal cords. These vibrate in the air passing across them, creating sound. Variations in the pitch of this sound are achieved by changing the tension on the cords; volume is changed by varying the force of air released from the lungs across the vocal cords. Some muscles of the larynx attach to the corniculate cartilages (which are mounted on the arytenoid cartilages).

Figure 7.2 Anatomy of the larynx. (Reproduced with kind permission from Waugh & Grant 2006.)

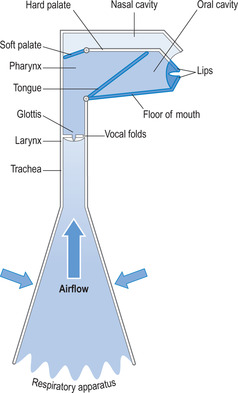

The corniculate and arytenoid cartilages are involved with opening and closing the glottis, and the production of sound. The muscles attached to these cartilages adjust the tension on the cords, changing the vibration frequency, and thus the sound produced. These sounds are not yet words. To achieve this we need the help of the tongue, jaw, lips and mouth. Precise muscle movements of the tongue, throat and lips allow for the shaping of the laryngeal sounds into recognizable words. Figure 7.3 shows in diagrammatic form the various anatomical features involved in vocalizing sounds (Seeley et al 2006).

Figure 7.3 The anatomy involved in speech production. (Reproduced with kind permission from Kindlen 2003.)

Some people who have suffered a traumatic injury to the neck, or have developed cancer of the larynx, have had their larynx removed surgically. This is known as laryngectomy (‘ectomy’ means removal). Without the two cartilages opening and closing the glottis, air cannot vibrate as it passes up the throat, leaving the patient without a voice. This represents a major change in ‘body image’ and a huge challenge for the patient, who must learn to speak through other means. Some patients were given a speaking tube that fitted through the neck and allowed air to pass through, thus mimicking the larynx. With modern technology, patients can learn to produce artificial speech from a synthesizer (Blows 2005).

The neurophysiology of speech

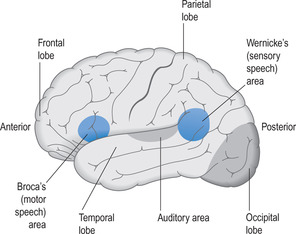

In the part of the brain called the motor cortex, there is an area, known as Broca’s area, which has specific control over the muscles of speech. However, the complexity of speech requires several different groups of muscles to work together, and so neuromuscular control of speech is far more sophisticated than that for other muscles. The brain shows lateralization for speech; that is, only one side of the brain carries out the function of controlling speech muscles. More than 95% of right-handed people have their Broca’s area on the left side of the brain for speech, whereas in left-handed people the figure is about 70%. So, for the majority of people, speech is a left-sided brain function.

Broca’s area is a region in the frontal lobe of the left cerebral cortex located below the primary motor cortex (see Figure 7.4). It appears to be a major site for the coordination of the muscles of speech (i.e. muscles of the tongue, lips and jaws). There may be muscle motor memory stored here that assigns specific muscle movements that will be required for the pronunciation of words. Creating speech is so sophisticated that it requires several related areas in the brain to work together, so near to Broca’s area are deeper structures in the brain tissues that are also involved. These include an area known as the caudate nucleus (part of the basal ganglia), parts of the insula (a region of cortex hidden behind the temporal lobe), the peri-aqueductal grey matter (within the brainstem) and the cerebellum. Studies of people who are mute, or people who cannot make sounds, found that they have damage or lesions in one or other of these areas. It has not yet been possible to determine the exact relationship of these structures or their specific contribution to making speech.

Figure 7.4 The location of Broca’s area and Wernicke’s area in the cerebral cortex. (Reproduced with kind permission from Waugh & Grant 2006.)

Speech and language

Being able to make and shape sounds into speech is only part of the process. Another important aspect is how we create words and sentences and language that is grammatically correct. Learning to shape sounds takes practice as well as the ability to recognize sounds as having specific meanings. We achieve this through another part of the brain, thought to be Wernicke’s area. This seems to be the major site for recognizing and understanding words and producing meaningful speech. Wernicke’s area is located in the posterior part of the auditory association cortex within the left temporal lobe (Figure 7.4). The auditory association area lies next to the main auditory cortex, the area that deals with hearing.

Generating language for speech

Language is either spoken or written, and the pathways connecting the various areas of the brain are different for spoken words than for written words. Spoken language involves hearing words (i.e. input to the auditory cortex); vocal responses involve Wernicke’s area, followed by Broca’s area and the main motor cortex to operate the muscles of speech. Written language first involves seeing words written down (i.e. input to the visual cortex); verbalizing those words again involves Wernicke’s area, linked to Broca’s area and the main motor cortex.

Aphasia

Aphasia means the inability to speak, but there are many types of aphasia depending on which part of the brain is involved in the problem. A simple division is to classify aphasia into the two main centres primarily involved: Broca’s aphasia (lesions of Broca’s area) and Wernicke’s aphasia (lesions of Wernicke’s area). In Broca’s aphasia, the patient understands spoken and written words; they know what they want to say, but they have significant difficulty in trying to say it (this is known as ‘expressive aphasia’). In Wernicke’s aphasia the opposite is true; the patient has the ability to speak but lacks the comprehension and understanding of what is said to them or what they want to say (this is known as ‘receptive aphasia’) (Carlson 2004). Stroke, or cerebrovascular accident, occurs when the blood supply to a part of the brain is interrupted, resulting in the death of the brain cells in this part of the brain and thereby affecting the person’s speech or language, or both.

Communicating with people who have speech deficits

As indicated above, aphasia is an impairment of language affecting the production or comprehension of speech and the ability to read or write. Aphasia is caused by injury to the brain, most commonly from a stroke (see Case history 7.3), and particularly in older adults. The aphasia can be so severe as to make communication with the patient almost impossible, or it can be very mild. People with expressive aphasia are able to understand what you say; people with receptive aphasia are not. Some people have elements of both types. For people with expressive aphasia, trying to speak is like having a word ‘on the tip of your tongue’ and not being able to call it forth. When communicating with individuals who have aphasia, it is important to be patient, to allow plenty of time, and to let the person try to complete their thoughts and struggle with words. Avoid being too quick to guess what the person is trying to express. Ask the person how best to communicate and what techniques or devices can be used to aid communication. A pictogram grid can be useful for ‘filling in’ statements such as ‘I need’ or ‘I want’: the person merely points to the appropriate picture.

Case history 7.3

Communicating with people who have speech deficit as the result of stroke

Mrs Smith, a 65-year-old woman, is in a rehabilitation ward. She was left non-responsive after a massive stroke a year ago. She has been receiving extensive speech therapy and physiotherapy and making slow progress. Mark, a student nurse, is on placement on this ward and finds it very difficult to communicate with Mrs Smith. He tries to apply the communication skills learned in class but they do not seem to work. Mrs Smith either hardly responds to him or seems to be frustrated when he doesn’t understand what she is trying to say. However, Mark observes his mentor, an experienced nurse, who handles the situation very well. Mrs Smith seems to be a different person when she sees the nurse coming to give her care. Mark notices that the nurse shows genuine interest in Mrs Smith’s well-being and speaks to her in simple and caring language. The nurse is very patient with Mrs Smith, listening well and using appropriate non-verbal behaviour. Mark can tell that Mrs Smith trusts the nurse and is very relaxed in the nurse’s presence.

After further reading, Mark gains a better understanding of stroke from both biomedical and biopsychosocial perspectives and realizes that stroke can affect human lives in a most abrupt manner. He discusses his learning with his mentor, who points out that caring for and communicating with people who have had a stroke involves multiple skills and comes with much practice. When oral communication is not possible or is minimal, non-verbal cues such as spending time with patients can convey a nurse’s commitment and caring, and furthermore gain the patient’s trust and foster a therapeutic nurse–patient relationship. Mark also learns that each patient is different and should be treated as an individual. Gaining an understanding of stroke and the patient’s experience of living with stroke is imperative in the process of delivering care to and communicating with stroke patients.

Hearing

The receiving and understanding of sound is a vital means of human communication. Contrary to how things appear, we do not ‘hear’ with our ears. The ear is actually a structure designed to convert sound waves into nerve impulses. It is not until these nerve impulses arrive at the conscious brain, the cerebral cortex, that we become conscious of the sound. The part of the cerebral cortex that allows us conscious appreciation of sound is a part of the temporal lobe called the auditory cortex.

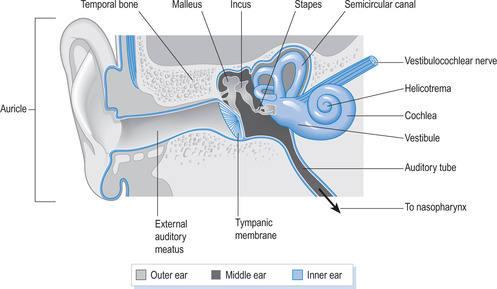

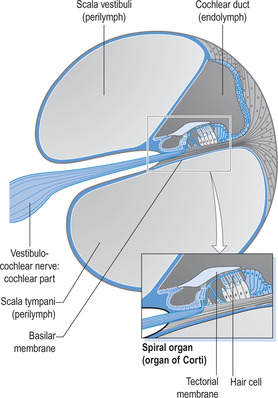

Anatomy of the ear (Figure 7.5)

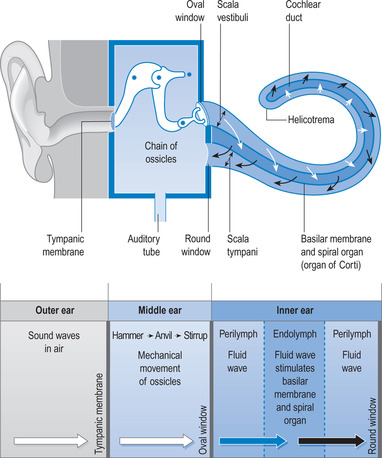

The outer ear

The external ear consists of the auricle (that part seen on the side of the head) and the external auditory meatus (the auditory or ear canal). The ear canal is about 2.5 cm long, but much shorter in children, and is lubricated by a wax-like secretion called cerumen. Blockage of this canal, by a foreign body in children, or by cerumen in adults, can result in significant loss of hearing until the blockage is cleared. At the end of the canal is a delicate membrane called the tympanic membrane (or ear drum).

Figure 7.5 Anatomy of the ear. (Reproduced with kind permission from Waugh & Grant 2006.)

The middle ear

On the other side of the membrane is an air-filled cavity called the middle ear. This is connected to the pharynx via a canal called the auditory or eustachian tube. Air can pass up or down this tube as necessary in order to equalize the air pressure on the two sides of the tympanic membrane. This is required to prevent any unequal pressure that would cause the membrane to bulge and perhaps become damaged. The lower end of the eustachian tube opens into the throat via a valve-like flap which opens on swallowing or chewing. Tympanic damage caused by unequal air pressure changes is called barotrauma and if severe enough can result in complete rupture of the tympanic membrane, causing loss of hearing.

Connecting the tympanic membrane with the inner ear are three tiny bones, the ossicles. They are linked together by joints, allowing them to move in response to vibrations of the membrane. The first bone, attached to the inner surface of the tympanic membrane, is the malleus (or hammer). The second bone is the incus (anvil) and the third is the stapes (stirrup). This last bone is anchored to a membranous ‘window’ (called the oval window, or fenestra ovalis) in the bony cavity that forms the inner ear.

The inner ear

The inner ear consists of a cavity (the bony, or osseous, labyrinth) in the temporal bone of the skull, and a membrane enclosing a space, the membranous labyrinth, within the bony labyrinth. The membranous labyrinth is filled with a fluid called endolymph, whilst a slightly different fluid, the perilymph, surrounds the membranous labyrinth and separates it from the bony labyrinth.

The inner ear cavity consists of three main parts. The vestibule is the main central compartment to which the stapes attaches (via the oval window). Extending from the vestibule are two main structures, the cochlea (the organ of hearing) and the semicircular canals (the organ for monitoring balance). The cochlea is subdivided into three compartments by the manner in which the membranous labyrinth is attached to the bony labyrinth. The upper compartment (the scala vestibuli) and the lower compartment (the scala tympani) are outside the membranous labyrinth and therefore contain perilymph. The middle compartment (the cochlear duct) is inside the membranous labyrinth and therefore contains endolymph. Sitting on the lower membrane (the basilar membrane), inside the cochlear duct, is a vibration-sensitive structure, the organ of Corti, the function of which is to convert waveform vibrations into nerve impulses (Figure 7.6). The cochlea is curled, like a snail shell, with the wide end merging with the vestibule, and the narrow end terminating with the helicotrema. The organ of Corti extends the full length of the cochlea and tapers in width, the widest end being close to the vestibule and the narrowest end being at the helicotrema.

Figure 7.6 The organ of Corti. (Reproduced with kind permission from Waugh & Grant 2006.)

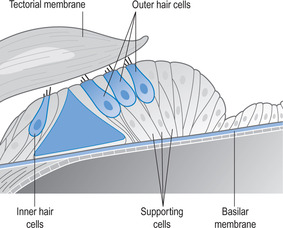

The organ of Corti contains about 16 000 epithelial receptor cells, also called hair cells because of their tiny hair-like extensions (called stereocilia). These hair cells are bathed by endolymph and are covered by another membrane, the tectorial membrane, which is attached along one edge to the bony labyrinth of the cochlea. The tips of the stereocilia are embedded in the tectorial membrane, which acts like a roof over the hair cells (Figure 7.7). There are four parallel rows of hair cells along the length of the organ of Corti: three rows together, and a fourth row offset from the others and angled slightly towards them (Shier et al 2004).

Figure 7.7 The hair cells of the cochlea. (Reproduced with kind permission from Kindlen 2003.)

Physiology of hearing

The whole hearing system is sensitive to vibrations. The source of the sound causes vibrations to occur in the air, and these move outwards like ripples on a pond. Such vibrations are funnelled down the auditory canal to the tympanic membrane, which then also vibrates. Tympanic membrane vibrations are transmitted through movements of the ossicles to the oval window, resulting in vibrations occurring in the membrane covering the oval window (Figure 7.8). The purpose of the ossicles is both to amplify the vibrations and to concentrate them onto the oval window. This amplification/concentration effect results in vibrations of the oval window being about 22 times greater than the vibrations of the tympanic membrane. The oval window vibrations cause ripple-like waves in the perilymph of the vestibule. These waves pass down the cochlea in the upper chamber, the scala vestibuli, and are transmitted across the upper membrane to the endolymph within the cochlear duct below. This in turn vibrates the basilar membrane and the organ of Corti on that membrane.

Figure 7.8 The physiology of hearing. (Reproduced with kind permission from Waugh & Grant 2006.)

Different sound frequencies cause vibrations in different parts of the organ of Corti; this is why humans are able to appreciate different sounds. The brain recognizes which hair cells on the organ of Corti are affected by vibrations and which are not. It is then possible for the brain to interpret this as specific sounds. Part of this achievement is due to the differing abilities of the basilar membrane to vibrate throughout its length. At the oval window end, the basilar membrane is stiff and narrow (despite the cochlea being widest at this point) and it vibrates here at a frequency of about 20 000 Hz. At the helicotrema, the basilar membrane is at its widest (although the cochlea is narrow) and more flexible. Here it vibrates in response to sound frequencies of about 20 Hz. Between these two points the basilar membrane varies gradually in width, stiffness and flexibility, allowing for a wide spectrum of sound appreciation.
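To help picture how position along the basilar membrane relates to pitch, the short sketch below estimates the frequency for any point between the two ends. It uses only the two figures quoted above (about 20 000 Hz at the oval window end and about 20 Hz at the helicotrema). The function name, the idea of expressing position as a fraction of the membrane's length, and the smooth logarithmic change between the two ends are all assumptions made for illustration; the text says only that the change is gradual.

```python
# Figures quoted in the text for the two ends of the basilar membrane.
BASE_FREQUENCY_HZ = 20_000.0  # stiff, narrow end at the oval window
APEX_FREQUENCY_HZ = 20.0      # wide, flexible end at the helicotrema


def approx_frequency_hz(position: float) -> float:
    """Estimate the sound frequency that vibrates a point on the membrane.

    position is a hypothetical fraction of the membrane's length:
    0.0 is the oval window end and 1.0 is the helicotrema.
    ASSUMPTION: frequency changes smoothly on a logarithmic scale
    between the two ends. This is an illustration, not measured data.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must lie between 0.0 and 1.0")
    ratio = APEX_FREQUENCY_HZ / BASE_FREQUENCY_HZ
    return BASE_FREQUENCY_HZ * ratio ** position


if __name__ == "__main__":
    for position in (0.0, 0.25, 0.5, 0.75, 1.0):
        frequency = approx_frequency_hz(position)
        print(f"{position:.2f} of the way along: about {frequency:.0f} Hz")
```

Under this assumed spacing, the midpoint of the membrane corresponds to a few hundred hertz, which illustrates why damage to one region of the organ of Corti may affect some pitches more than others.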

At rest, the stereocilia on the hair cells are upright and potassium channels in these structures are partly open, allowing small quantities of potassium to enter the hair cells. As a result, the hair cells release low levels of neurotransmitter into the synapse between the hair cell and the neuron below. This neuron then sends low-frequency impulses (also called action potentials) along the nerve to the brain. Endolymph and tectorial membrane vibrations created by incoming sounds cause the stereocilia of the hair cells to bend one way or the other. If they bend towards the longer stereocilia, a greater influx of potassium (and calcium) into the hair cell occurs. This causes more neurotransmitter to be released and therefore a higher frequency of action potentials is sent to the brain. If the stereocilia are bent towards the shorter ones, the potassium input to the hair cells is cut off and no neurotransmitter is released. This results in no action potentials being sent to the brain (Shier et al 2004).
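The three situations described above (stereocilia upright, bent towards the longer stereocilia, bent towards the shorter ones) can be summarized as a simple lookup. The sketch below is only a revision aid: the names `Bend` and `hair_cell_response` are hypothetical, and the values are qualitative labels taken from the paragraph, not measurements.

```python
from enum import Enum


class Bend(Enum):
    """Direction in which the stereocilia are deflected."""
    UPRIGHT = "upright (at rest)"
    TOWARDS_LONGER = "towards the longer stereocilia"
    TOWARDS_SHORTER = "towards the shorter stereocilia"


# Qualitative outcomes as described in the text; labels are illustrative only.
_RESPONSES = {
    Bend.UPRIGHT: {
        "potassium_entry": "small",
        "neurotransmitter_release": "low",
        "impulses_to_brain": "low frequency",
    },
    Bend.TOWARDS_LONGER: {
        "potassium_entry": "increased",
        "neurotransmitter_release": "high",
        "impulses_to_brain": "high frequency",
    },
    Bend.TOWARDS_SHORTER: {
        "potassium_entry": "none",
        "neurotransmitter_release": "none",
        "impulses_to_brain": "none",
    },
}


def hair_cell_response(bend: Bend) -> dict:
    """Return a copy of the qualitative response for a given deflection."""
    return dict(_RESPONSES[bend])


if __name__ == "__main__":
    for bend in Bend:
        print(f"Stereocilia {bend.value}: {hair_cell_response(bend)}")
```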

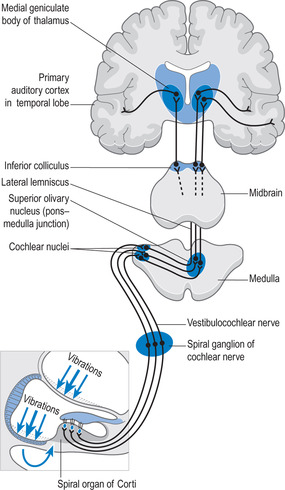

Impulses from the organ of Corti pass along three orders of neurons (Figure 7.9), the first being those of the cochlear nerve. Hair cells are connected to nerve endings that are terminations of the cochlear nerve. This nerve is one branch of the vestibulocochlear nerve, the eighth cranial nerve that passes to the cochlear nuclei of the brainstem. From here, second-order neurons partly cross to the opposite side (known as decussation) to another medullary centre, the superior olivary nucleus. Others pass to the superior olivary nucleus on the same side as their origin. From these nuclei, ascending neurons course through the pons and midbrain and terminate in the medial geniculate nucleus of the thalamus. The third-order pathway is to the conscious brain, the auditory cortex of the temporal lobe, which is part of the cerebral cortex.

Figure 7.9 The neurophysiology of hearing.

The brain has the ability to sort out the many thousands of different impulses sent from the different parts of the organ of Corti, and to interpret these as specific sounds. Part of the brain’s role is to store all previous auditory information in an area called the auditory association area, which surrounds the auditory cortex and acts like a sound library (see also Wernicke’s area under the neurophysiology of speech). The main auditory cortex can access this stored data to assist in the correct analysis and identification of sounds. The brain can also judge the direction from which sound is coming, its distance from the ear, and detect any movements in the sound.

Deafness

There are two main types of deafness: conductive deafness and sensorineural deafness. Conductive deafness is caused by a problem that occurs somewhere along the mechanical pathway that conducts vibrations through the ear. This would mean any disruption of the anatomy or function of the tympanic membrane, the ossicles, and so on. Treatment can range from simply unblocking the external canal to more complicated surgery. Sensorineural deafness is caused by any problem occurring in the neurological pathways that convey impulses from the organ of Corti to the brain. Treatment usually involves the use of a hearing aid to boost stimulation of the organ of Corti.

Communicating with people who have hearing deficits

Hearing-impaired people need to supplement hearing with lip-reading. It is important, therefore, to provide a conducive environment with reduced background noise and proper lighting to allow patients/clients to lip-read. There are a few things you can do to make it easier for the patient/client to lip-read: for example, face the patient when speaking, keep your hands away from your mouth while speaking and do not exaggerate lip movements. Gestures and visual aids can also be very helpful. If the person wears a hearing aid and still has difficulty hearing, check to see if the hearing aid is in the person’s ear. Also check to see that it is turned on, adjusted and has a working battery. If these things are fine and the person still has difficulty hearing, find out when they last had a hearing evaluation. Keep in mind that people with a hearing deficit hear and understand less well when they are tired or ill. If the person has difficulty understanding something, find a different way of saying the same thing, rather than repeating the original words over and over. If the person can read, write messages down, and keep your statements and questions concise.

Vision

Analogous to what was stated about the ear, we do not actually ‘see’ with the eye. The eye is a mechanism for converting light intensity into nerve impulses. We only see the world around us when those impulses arrive at the conscious brain, the cerebral cortex.

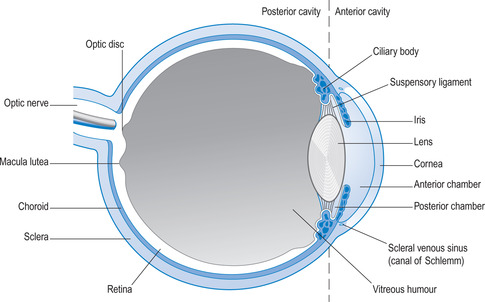

Anatomy of the eye

The eye is mounted inside a bony socket called the orbit. Each eye is moved around in the orbit by six muscles controlled by three cranial nerves from the brainstem (Blows 2001). The internal structure divides the eye into two main compartments: the anterior and posterior cavities (Figure 7.10). These are fluid-filled compartments; the anterior cavity contains aqueous (water-like) humour, the posterior cavity contains vitreous (jelly-like) humour. Both fluids are crystal clear to allow for the passage of light.

Figure 7.10 Section through the eye. (Reproduced with kind permission from Waugh & Grant 2006.)

The wall of the eye is composed of three layers: the outer sclera (the white of the eye), the middle choroid coat (a vascular layer) and the inner retina (the light-sensitive layer). At the front of the eye, the sclera becomes a round clear patch (the cornea), which bulges forwards slightly due to the pressure of the aqueous humour of the anterior cavity. Also towards the front, the choroid layer becomes the ciliary body, the base to which suspensory ligaments attach for holding the lens. Suspensory ligaments play a vital role in changing the shape of the lens for the purpose of accommodation, which is the ability to focus on objects at different distances. Accommodation is automatic, governed by the brainstem via the third cranial nerve. Problems with accommodation are not uncommon. Myopia is near-sightedness (i.e. close objects are seen clearly but distance vision is blurred). The opposite is hypermetropia (hyperopia; i.e. far-sightedness, where close objects are seen as blurred but distance vision is clear). Presbyopia is a natural age-related change in the lens resulting in a gradual loss of normal accommodation. Like all ageing processes it is not preventable. The ‘near-point’ is the closest an object can get to the eye and remain in focus. In presbyopia, the near-point extends gradually further away, from a normal 23 cm to sometimes an arm’s length or more. Fortunately, accommodation errors can be corrected with suitable glasses (Seeley et al 2006).

Extending forwards from the ciliary body is the iris, which divides the anterior cavity into an anterior chamber and a posterior chamber. The aqueous humour that fills both is produced from the ciliary body and flows forwards through the pupil, the opening at the centre of the iris, and is reabsorbed by a canal (the canal of Schlemm) in the anterior chamber. In this way, the aqueous humour is constantly being renewed, being derived from blood plasma and returning to blood plasma. The iris can close the pupil (in bright light) or open the pupil (in dull light) in order to regulate the amount of light falling on the retina. Like accommodation, this action is automatic, being controlled by the third cranial nerve.

The retina is unique in one respect: it is the only part of the nervous system that is visible from the outside world. It is formed during embryological life as a forward growth from the brain, and can be viewed using an ophthalmoscope. It is the light-sensitive layer that converts light into nerve impulses. These travel to the brain via the optic nerve (the second cranial nerve).

Physiology of vision

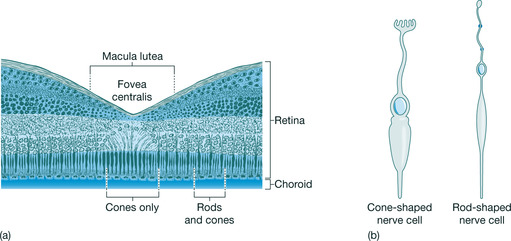

Light passes through the cornea and the pupil, and is concentrated and focused on the retina by the lens. The retina has two main cell types: rods and cones (Figure 7.11).

Figure 7.11 (a) The cellular structure of the retina; (b) the light-sensitive cells of the retina. (Reproduced with kind permission from Waugh & Grant 2006.)

The rods are specialized retinal cells for responding to black and white and to night (low-light) conditions; cones are similar cells for responding to colour and to day (bright-light) conditions. Rods have a high sensitivity to light (this is needed in low-light situations) and there are many of them (100 000 000, or 10⁸, per retina). Compare this to the cones, which have low sensitivity to light (suitable for bright light) and are fewer in number (3 000 000, or 3 × 10⁶, per retina); cones do not respond at all well in low-light situations. There are three types of cones (red, green and blue), providing a full spectral colour range; they are more concentrated at the fovea (Figure 7.11). Both rods and cones carry out phototransduction, which is the conversion of light energy to nerve impulses. This involves complex chemical changes starting with a light-sensitive pigment called rhodopsin (or visual purple) which is partly produced from vitamin A.

Rods and cones are attached to a second layer of cells (the bipolar cells), which in turn are connected to ganglion cells leading to the optic nerve. The surprising aspect is the manner in which these layers occur in the retina. The rods and cones lie in the layer nearest the choroid, and the ganglion cells lie in the layer nearest the vitreous humour (Figure 7.11). This means that light striking the retina must first pass the ganglion and bipolar cells before reaching the rods and cones. Convergence (i.e. several rods linked to a single bipolar cell) occurs across the retina; this also applies to cones but to a lesser extent.

Another surprise is that light deactivates the rods and cones; that is, light switches them ‘off’ not ‘on’. In the dark, both rods and cones produce a neurotransmitter at the synapses between them and the bipolar cells. This chemical increases activity in the bipolar cells, which in turn affects the activity in ganglion cells. Some bipolar–ganglionic synapses are excitatory, increasing ganglionic activity; others are inhibitory, decreasing activity. In the light, the rods and cones produce far less neurotransmitter and therefore the bipolar and ganglion cells are less affected by them. In this way, variations in light or dark on the retina are communicated to the ganglion cells, and thus to the optic nerve (Shier et al 2004).

The neurophysiology of vision

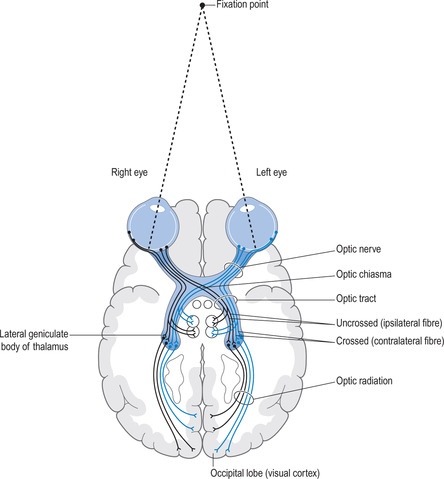

Impulses generated by the retina travel along the optic nerve and arrive at the optic chiasma (Figure 7.12). Here the two optic nerves join, allowing the lateral half of each retinal output to remain on the same side, while the medial halves (nearest the nose) cross to the opposite side (known as decussation). From here backwards the visual pathways (called optic tracts) are contained within the brain. The left optic tract carries impulses from the left lateral and right medial retinas; the right optic tract carries impulses from the right lateral and left medial retinas. These pass to part of the thalamus called the lateral geniculate nucleus, and from there they pass via the optic radiations to the visual cortex in the occipital lobe of the cerebrum. The impulses arrive upside down and split between the two sides, which would create a very strange view of the world.

Figure 7.12 The neurophysiology of vision.
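The crossing pattern at the optic chiasma can be hard to hold in mind, so the sketch below restates it as a small rule: lateral retinal halves stay on their own side, and medial halves cross. The function name `optic_tract_for` and the use of plain strings for eye and retinal half are hypothetical choices for illustration; only the routing itself comes from the description above.

```python
def optic_tract_for(eye: str, retinal_half: str) -> str:
    """Return which optic tract ('left' or 'right') carries the impulses.

    eye is 'left' or 'right'; retinal_half is 'lateral' or 'medial'.
    Lateral halves remain on the same side; medial halves (nearest the
    nose) cross to the opposite side at the optic chiasma (decussation).
    """
    if eye not in ("left", "right"):
        raise ValueError("eye must be 'left' or 'right'")
    if retinal_half not in ("lateral", "medial"):
        raise ValueError("retinal_half must be 'lateral' or 'medial'")
    if retinal_half == "lateral":
        return eye
    # Medial half: cross to the opposite side.
    return "right" if eye == "left" else "left"


if __name__ == "__main__":
    for eye in ("left", "right"):
        for half in ("lateral", "medial"):
            tract = optic_tract_for(eye, half)
            print(f"{eye} eye, {half} retina -> {tract} optic tract")
```

Running the loop shows that the left optic tract receives the left lateral and right medial retinas, and the right optic tract receives the right lateral and left medial retinas, matching the pathway described in the text.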

The visual cortex reassembles the picture into the view we are familiar with. Around the visual cortex is the visual association area, which allows for the identification of visual stimuli. So, if you look at a chair, you know it is a chair because the new visual stimulus will be compared with previous stimuli stored in the visual association area.
